EM12c and Alerting: Check, Double-Check and Where Else Can I Check?

Metric settings, alerting, notifications and escalations have been enhanced in EM12c to support the demands falling ever more heavily on the DBA and the larger support scale of the database environment (ASM, Exadata, middle-tier systems, etc.). As frustrated as I know some folks became when troubleshooting alerting issues in previous versions of Enterprise Manager, it should be acknowledged that EM12c has many new features and a new interface for this important part of the product.

I know that many times I’m so busy keeping my eye on the ball that I can miss what’s stealing third base right under my nose. This has always been a challenge for me and, from discussions, for a number of DBAs in the middle of large migrations. A while back I completed a “low target count, high quantity/complex job” EM12c migration and, as is so often the case, ended up with a very small issue building into the perfect storm.

The migration was multi-step. The first servers and jobs migrated over seamlessly and, even with demanding job run schedules, I was able to migrate everything and have it up and running without any downtime to the customer. The last move was a RAC cluster with 40+ jobs, including backup and maintenance jobs, that all needed to be created manually in the new EM12c, as this was the only supported process for moving the previous EM12c from a server being taken out of service to its replacement. I completed the transition and, through the night, the only issue appeared to be a few jobs that had been created with credentials the DBA team did not possess, which required a few simple changes to work around in the new environment.

The next day, no issues were seen in the early morning hours and all appeared to be working well. Then app support notified us that the database was experiencing issues. Logging into the system, I was able to quickly assess a hung archiver problem and started to research how to address it most efficiently. I allocated more space to the FRA, but the FRA was on an ASM disk that had hit its space limit on the target in question. Inspecting the backups, I found that the migrated backup job had failed to write to tape and had switched to writing to the FRA location. During this time, the sessions in the system had piled up and I was unable to connect with RMAN to delete the backup, either through the EM console job or the command line. I could see the backup pieces residing in the ASM location with ASMCMD and, with time running out and access to the production environment compromised, I removed the files “the hard way”.

I still could not switch a logfile. The system was simply refusing to release the space to the FRA so that archiving could resume. This was a 24x7 system for the client and, as luck would have it, this was the busiest time of the day for them. I notified the customer that I would likely have to cycle the database to force the database and server to recognize the freed space, and they agreed. After the cycle, the re-allocated space was quickly recognized and archiving resumed immediately. I performed crosschecks of the backups to clean up after my earlier forced removal, and removed the jobs that were failing and redirecting to Oracle’s default choice of the FRA destination.

So, we now need to circle back to what concerns me and what I feel is a disconnect in the default configuration of alerts and notifications in EM12c. As soon as the backup job filled up the FRA location because it could not write to the SBT_TAPE location, I should have been notified by EM12c. This is not like a job that I built in the EM job library, where I choose whether or not to be notified. There isn’t a section that asks if I want to be notified, and I would expect notification to be automatic, as important as backups are.

I also received the following errors in the alert log when the FRA filled up again from archiving:

ORA-19809: limit exceeded for recovery files

ORA-19804: cannot reclaim 536870912 bytes disk space from 107374182400 limit

ARC0: Error 19809 Creating archive log file to '+ORAASM02'

ARCH: Archival stopped, error occurred. Will continue retrying

ORACLE Instance <sid> - Archival Error
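The byte counts in the ORA-19804 line are easier to reason about once converted. The following is a small illustrative sketch (not part of the original troubleshooting) that pulls the two numbers out of the message text and converts them to binary units. The regular expression and variable names are my own assumptions for the example.

```python
import re

# The ORA-19804 line exactly as it appears in the alert log excerpt.
message = ("ORA-19804: cannot reclaim 536870912 bytes disk space "
           "from 107374182400 limit")

# Hypothetical pattern for this one message format: the first number is the
# space Oracle tried to reclaim, the second is the recovery area size limit.
match = re.search(r"cannot reclaim (\d+) bytes disk space from (\d+) limit",
                  message)
needed_bytes, limit_bytes = (int(group) for group in match.groups())

MIB = 1024 ** 2
GIB = 1024 ** 3

needed_mib = needed_bytes / MIB
limit_gib = limit_bytes / GIB

print(f"needed: {needed_mib:.0f} MiB, FRA limit: {limit_gib:.0f} GiB")
```

In other words, the archiver could not find 512 MiB of reclaimable space within a 100 GiB recovery area limit.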

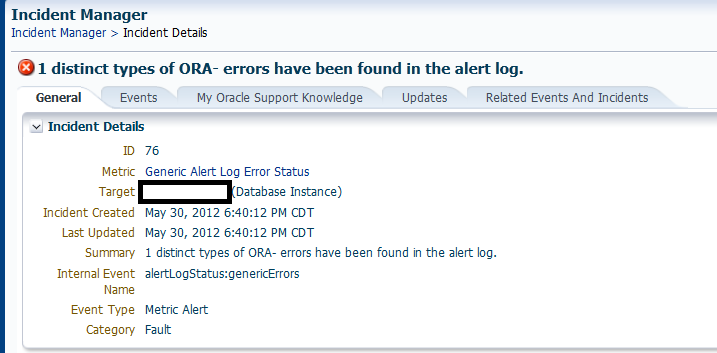

The above errors did not send out an alert notification email, even though an incident was created. I was highly concerned about what was misconfigured to cause this. As this was a brand new EM12c with the latest bundle patch, I was also concerned that anyone implementing the newest release would end up with the same configuration.

When first setting up your EM12c, you will fill out your notification methods and email addresses, ensuring that email is enabled and that the right people receive it during the correct monitoring window. The next important piece, and this is one of the areas that caused the problem, is to look at the default rule sets. I always recommend copying and editing the rule set to fit your needs, but you also need to look a little deeper into what the rules are doing individually, otherwise you may end up in the same scenario I did.

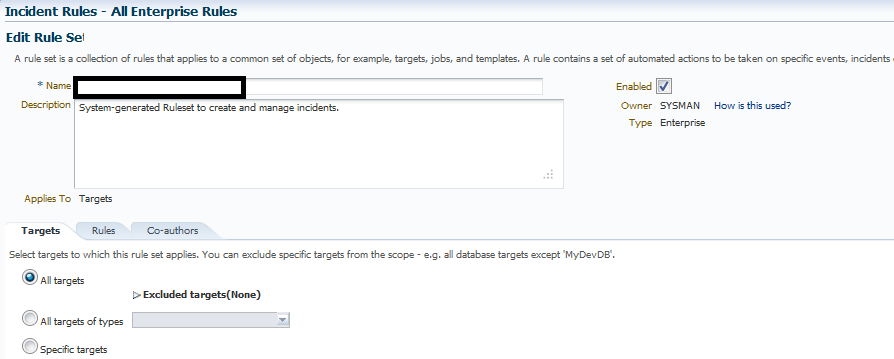

You first inspect your rule set. (Mine is a copy of the original, so the default rule set will appear the same as the one you see below.)

Is it enabled? Does it exclude any targets? These are all valid questions but, unfortunately, neither was the problem here:

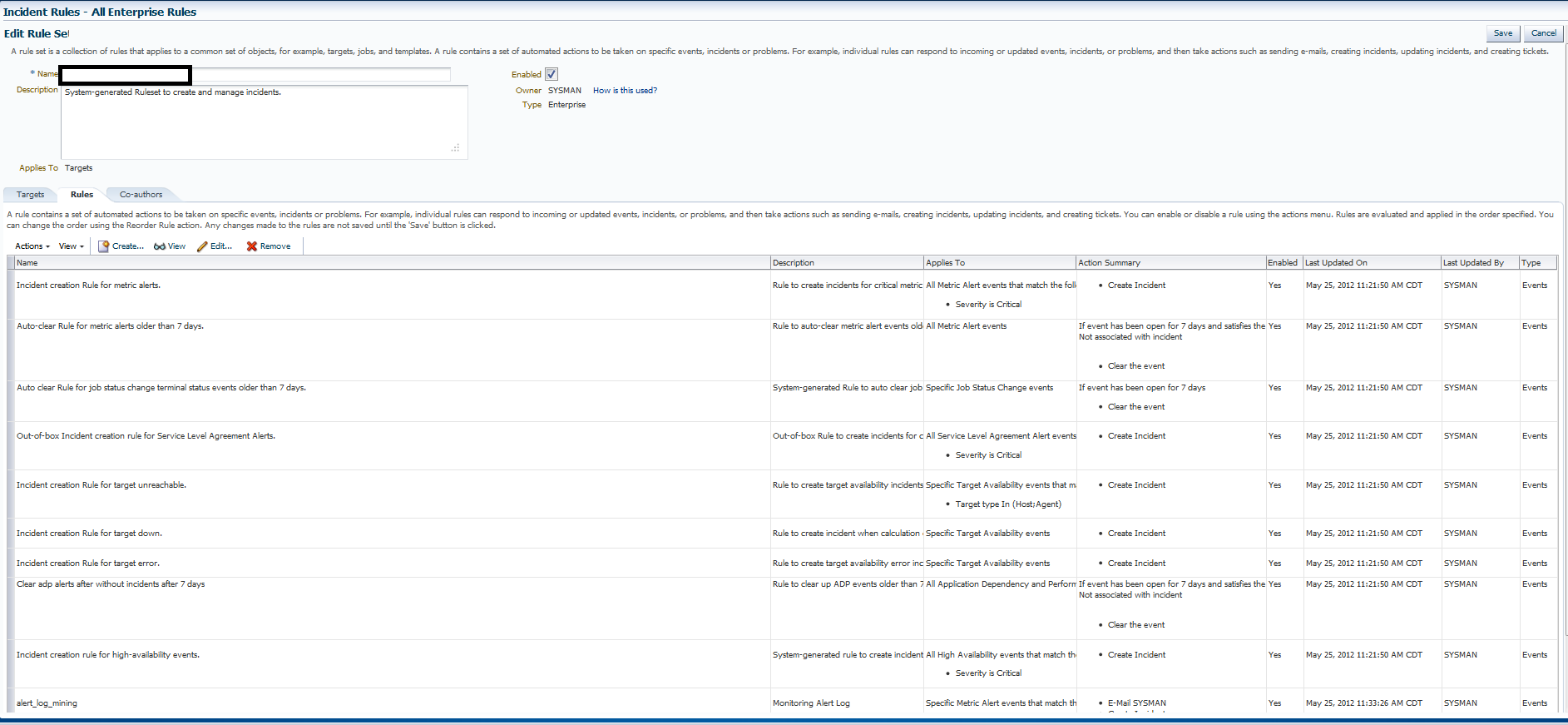

Are there rules missing, or rules that do not send emails for the problem?

More valid questions, but no, this was also not the case:

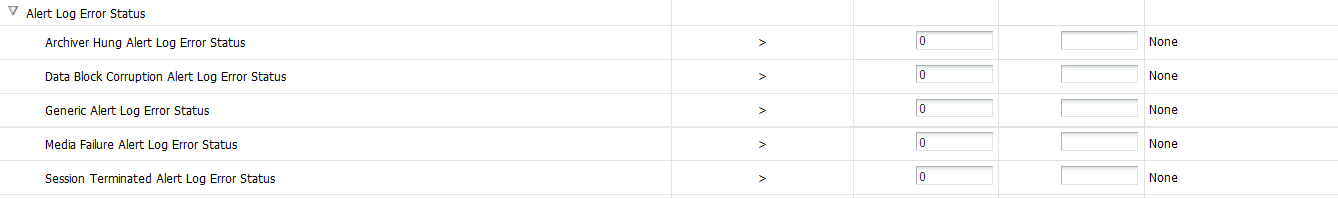

So now we venture on to metric settings. If our rules are correct and our incidents are being created, what is it about the metrics that prevents the emails?

What you see below is just the section of metric settings for the errors above. Note that in the left column the metric settings of “0” are warning thresholds, while critical is set to null. There are no settings for critical!

If you look back up at the rules, you will notice they are all set to alert when “Severity is Critical”.

If there are no metric settings for critical, then no emails will be sent in response. The rules do go on to create incidents, but again, no alert notifications are generated for the DBA to address the problem. The easy fix for me, as I did not want to add to what I already had in rules, was to edit the metric settings and include “0” in the critical columns as well. EM will warn you that the warning and critical values are set the same, but for this type of metric alert it functions fine, and I was able to test successfully by forcing an ORA- error into the alert log:

SQL> exec dbms_system.ksdwrt(2,'ORA-00600: Test message, verifying alert log monitoring in EM12c');

PL/SQL procedure successfully completed.

Viewed the alert log:

Wed May 30 18:26:53 CDT 2012 ORA-00600: Test message, verifying alert log monitoring in EM12c

I then forced an upload from the agent to speed along the alerting process. (I’m so impatient…)

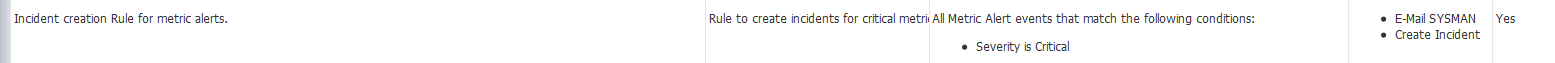

Email received and the incident shows, the alerting mechanism now works with the rule set:

With the new EM12c (this includes the latest bundle patch), GO THROUGH and inspect ALL OF THE METRIC SETTINGS for CRITICAL VALUES. Without critical values, the default rule set will not notify you for these metrics, unless you update your rules either to alert on warnings, which to me creates a lot of extra paging, or to add secondary rules that look for warning alerts on these specific metrics, which are crucial to any DBA monitoring an environment.
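To make the mismatch between thresholds and rules concrete, here is a minimal Python model of the behavior described in this post. It is only a sketch of the logic, not EM12c code or its API: the `Threshold` class, the `severity` and `rule_sends_email` functions, and the "value greater than threshold" comparison are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Threshold:
    """Hypothetical stand-in for one row of metric settings."""
    warning: Optional[int]
    critical: Optional[int]   # None models the "null" critical column


def severity(value: int, threshold: Threshold) -> Optional[str]:
    """Return the highest severity whose threshold the value exceeds.

    Assumes a simple '>' comparison, which is an illustration only.
    """
    if threshold.critical is not None and value > threshold.critical:
        return "CRITICAL"
    if threshold.warning is not None and value > threshold.warning:
        return "WARNING"
    return None


def rule_sends_email(sev: Optional[str],
                     rule_severities=frozenset({"CRITICAL"})) -> bool:
    """Model a rule that only notifies when 'Severity is Critical'."""
    return sev in rule_severities


errors_in_alert_log = 1

# Default settings from the post: warning = 0, critical = null.
default_settings = Threshold(warning=0, critical=None)
default_sev = severity(errors_in_alert_log, default_settings)

# The fix from the post: put 0 in the critical column as well.
fixed_settings = Threshold(warning=0, critical=0)
fixed_sev = severity(errors_in_alert_log, fixed_settings)

print(default_sev, rule_sends_email(default_sev))
print(fixed_sev, rule_sends_email(fixed_sev))

# The alternative: keep the thresholds and let the rule match warnings too.
print(rule_sends_email(default_sev, frozenset({"WARNING", "CRITICAL"})))
```

In this model the default settings can only ever raise a warning, so a rule that matches critical severity never sends an email, while either remedy (a critical threshold, or a rule that also matches warnings) produces a notification.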

Thanks very much for the post. My current issue is that I don’t receive any email for availability or any other issues I set rules for. I did before, when I was subscribed to the system generated rule sets, but later I unsubscribed from them and could not get back. Now I do not receive any emails at all.

My notification part is set up ok as I did get emails before.

Thanks

Nancy

Hi Nancy,

1. You receive test emails.

2. If you look in the rule sets, the system generated rule sets are currently disabled?

3. You have copied the rule sets or created your own, those did not work, and you were now hoping to go back to the system generated ones?

If this is correct, then I would recommend doing the following.

1. Click on the system generated rules, click on “Create Like”, name the rule set and save.

2. Edit the rules, individually, adding the notifications for the ones that you want to have send you alerts, (critical alerts, availability.)

3. Save the changes

4. You can then test out availability, etc. and verify that you now receive emails.

Let me know if this corrects your problem.

Kellyn