EM12c and Windows OS Failover Cluster- Keep it Simple

This is going to be a “KISS”, (Keep it Simple, Silly) post. I was offered a second Windows cluster to test on when my original one was required for QA. It was a gracious offer, but I also found out why a cluster should be kept simple, and this cluster was anything but. Many times we create issues that we never see coming until we go through the pain, and installing EM12c on this cluster has been both “educational” and painful.

The failover cluster is a Windows 2008 server with three Client Access Points, (CAP). Each CAP has a drive assigned to it. The drives are 20GB each, so they aren’t TOO large for an EM12c environment build with an OMR, (Oracle Management Repository) also residing on the same host.

It may get confusing for some folks fast, so I’ll try to build a legend to help everyone keep it all straight.

The cluster is cluster-vip.us.oracle.com.

The nodes in the cluster are node1.us.oracle.com and node2.us.oracle.com.

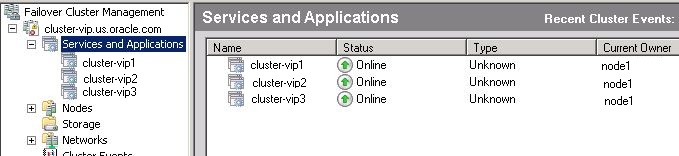

The CAPs are cluster-vip1.us.oracle.com, cluster-vip2.us.oracle.com and cluster-vip3.us.oracle.com. They each have one shared drive allocated to them that can fail over:

- F:\ = cluster-vip1.us.oracle.com

- G:\ = cluster-vip2.us.oracle.com

- H:\ = cluster-vip3.us.oracle.com

This should start sounding like an Abbott and Costello skit pretty quick, so I’ll try to explain as much as I can and hope that each reader has some knowledge of Windows clustering… 🙂

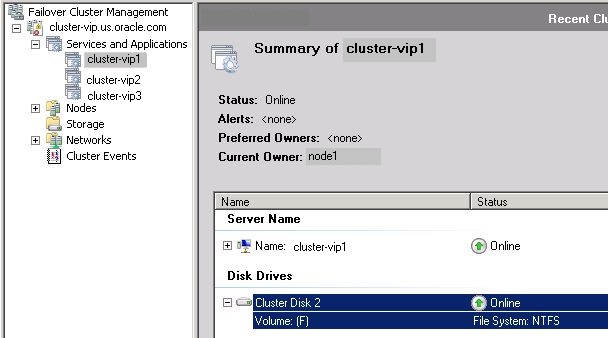

Each failover cluster CAP will appear like this in the cluster manager:

Due to the size of each shared volume, we’re going to install the database on cluster-vip3, (H:\ volume) and the EM12c console and repository on cluster-vip2, (G:\). Cluster-vip1, (F:\) will be left available for database growth.

As our first step for installing on a Windows cluster, we need to set the %ORACLE_HOSTNAME%, (or for Unix/Linux, $ORACLE_HOSTNAME) environment variable. For the database, it’s going to be cluster-vip3.us.oracle.com, as that is the hostname associated with the volume, (H:\) we wish to install on, (anyone already seeing the dark road we are starting down? 🙂)
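To keep the drive-to-hostname pairing straight, here is a minimal Python sketch that looks up the value ORACLE_HOSTNAME should have for a given install path. The mapping comes straight from the legend above; the function name and the example Oracle home paths are my own assumptions, purely for illustration.

```python
import ntpath

# Drive-to-CAP legend from this post; adjust for your own cluster.
CAP_BY_DRIVE = {
    "F:": "cluster-vip1.us.oracle.com",
    "G:": "cluster-vip2.us.oracle.com",
    "H:": "cluster-vip3.us.oracle.com",
}


def oracle_hostname_for(install_path):
    """Return the CAP hostname that owns the drive of install_path."""
    # ntpath parses Windows-style paths on any platform.
    drive, _ = ntpath.splitdrive(install_path)
    try:
        return CAP_BY_DRIVE[drive.upper()]
    except KeyError:
        raise ValueError(
            f"{install_path!r} is not on a shared cluster drive"
        ) from None


if __name__ == "__main__":
    # Hypothetical Oracle home paths, used only as examples.
    print(oracle_hostname_for(r"H:\oracle\product\dbhome_1"))
    print(oracle_hostname_for(r"G:\oracle\middleware"))
```

It doesn’t set anything for you, it only tells you which hostname goes with which volume before you start an installer.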

After the installation of the Oracle binaries, (software) and the database is complete, you need to verify that the listener is configured correctly and that the database can be reached via TNS, (“sqlplus / as sysdba” won’t cut it, EM12c must be able to connect with “sys/system/sysman@<dbname>”).

This will most likely require a manual edit of the tnsnames.ora file to replace the hostname in the connection string with the IP Address:

    EMREP =
      (DESCRIPTION =
        (ADDRESS_LIST =
          (ADDRESS = (PROTOCOL = TCP)(HOST = <cluster-vip3 IP>)(PORT = 1521))
        )
        (CONNECT_DATA =
          (SERVICE_NAME = EMREP)
        )
      )

    LISTENER_EMREP =
      (ADDRESS = (PROTOCOL = TCP)(HOST = <cluster-vip3 IP>)(PORT = 1521))

Now you might say, “but they are all on the same host!”, but the cluster doesn’t see it this way due to the way the CAPs have all been configured. Each drive is a different CAP and must be identified by its hostname or IP address.
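Before going further, it can help to confirm that the listener answers on the CAP address rather than on the node name. The sketch below is only a TCP port check, so it is not a substitute for a real TNS login as sys, system or sysman; the function name and the timeout are my own assumptions.

```python
import socket


def listener_reachable(host, port=1521, timeout=3.0):
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers refused connections, timeouts and name resolution failures.
        return False


# Example call, with the database CAP from this post:
#   listener_reachable("cluster-vip3.us.oracle.com")
```

If this returns False for the database CAP but True for the node name, the listener is most likely bound to the wrong address.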

Now here’s where the fun starts- You now need to set the %ORACLE_HOSTNAME% environment variable to cluster-vip2.us.oracle.com for the EM12c installation. Remember, our EM12c is going to reside on the G:\ volume of shared storage.

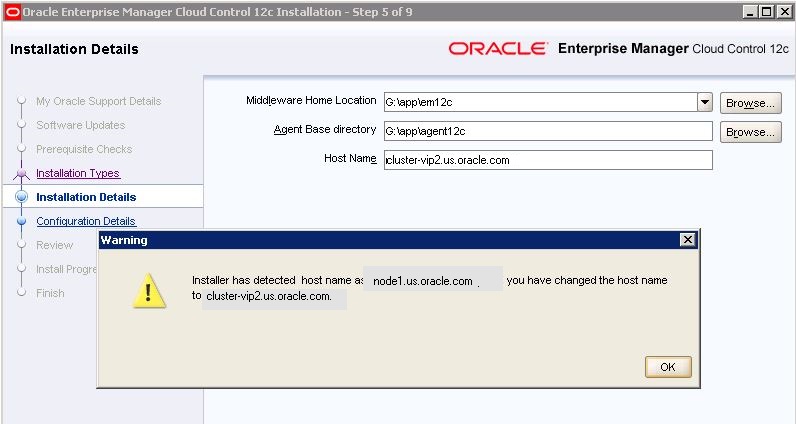

So we now set our environment variable, restart the cluster, bring the cluster services all back online on node1, bring up the database and listener, and then start the EM12c installation. The install will auto-populate the node1 name, but you need to change it to the value of the %ORACLE_HOSTNAME% environment variable, which will now be set to cluster-vip2.us.oracle.com:

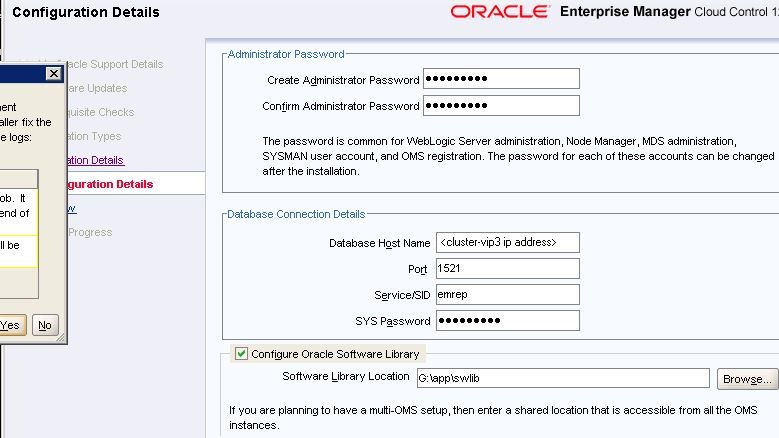

The installer will verify the new host name, (unless it matches the ORACLE_HOSTNAME environment variable, it will not succeed!) and then asks for information about the database you want to use for your repository, which is identified as being on a different VIP:

There is a common step here: even though you may have created your repository database, (OMR) without dbcontrol, the installer will ask you to remove the dbcontrol schema so the EM12c SYSMAN schema can be installed.

The command looks like this:

<Database ORACLE HOME>/bin/emca -deconfig dbcontrol db -repos drop -SYS_PWD <Password> -SYSMAN_PWD <Password>

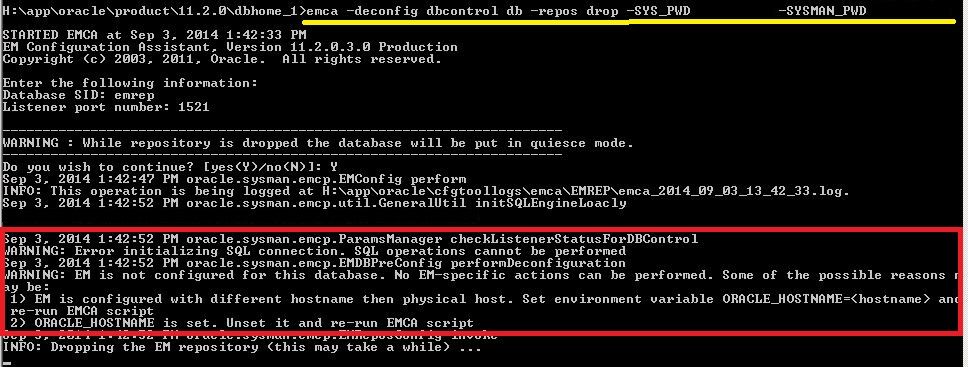

If you attempt to run this, with the ORACLE_HOSTNAME set to the correct address for the G:\ drive, (cluster-vip2.us.oracle.com), but the database is installed on the H:\ drive, (cluster-vip3.us.oracle.com) the following error will be seen:

It will act as if it’s run the removal, but if you log back in, the SYSMAN schema is still intact and no removal is performed. The trick here is that the command should be copied or saved off to run again from the command line and the installation for the EM12c should be cancelled.

Why? This step has to be performed with the %ORACLE_HOSTNAME% set to the database VIP again, (cluster-vip3.us.oracle.com) instead of the EM12c VIP, (cluster-vip2.us.oracle.com.)
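Since the removal appears to succeed even when it hasn’t, a small guard before running emca can save a round trip. This is a minimal sketch, assuming you just want to refuse to continue when ORACLE_HOSTNAME is not the database VIP; the function names and the example Oracle home are hypothetical, and the emca arguments are simply the ones from the command above.

```python
import ntpath
import os

# VIP that owns H:\, where the repository database lives in this post.
DB_VIP = "cluster-vip3.us.oracle.com"


def assert_oracle_hostname(expected, environ=None):
    """Raise RuntimeError unless ORACLE_HOSTNAME matches the expected VIP."""
    environ = os.environ if environ is None else environ
    actual = environ.get("ORACLE_HOSTNAME", "")
    if actual.lower() != expected.lower():
        raise RuntimeError(
            f"ORACLE_HOSTNAME is {actual!r}, expected {expected!r}; "
            "reset it before running emca"
        )


def emca_deconfig_command(oracle_home, sys_pwd, sysman_pwd):
    """Build the argument list for the dbcontrol removal shown in this post."""
    emca = ntpath.join(oracle_home, "bin", "emca")
    return [emca, "-deconfig", "dbcontrol", "db", "-repos", "drop",
            "-SYS_PWD", sys_pwd, "-SYSMAN_PWD", sysman_pwd]


if __name__ == "__main__":
    # Simulate the failing case: the variable still points at the EM12c VIP.
    env = {"ORACLE_HOSTNAME": "cluster-vip2.us.oracle.com"}
    try:
        assert_oracle_hostname(DB_VIP, env)
    except RuntimeError as err:
        print(err)
```

The sketch only checks the variable and builds the argument list; it deliberately does not run emca or change the environment, since on this cluster the variable only took effect after cycling the node.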

There are a number of steps to complete this:

- cycle node1 of the cluster to set the environment variable.

- bring the cluster services back to node1 that would have failed over to node2.

- bring the database and listener services back up.

- start a command prompt, run the command to remove dbcontrol.

- set the environment variable back to cluster-vip2 for the EM12c installation.

- cycle node1 to put the environment variable back in place.

- bring the cluster services back over to node1, (there are three services, one for each drive, not just one with this configuration.)

- bring the database and listener back up, (again!)

- restart the EM12c installation.

Yeah, I wasn’t too thrilled, either! I now was able to run through the installation without being hindered by the dbcontrol step that is automatically hit, even if I chose NOT to install dbcontrol when I created the database… 🙂 The rest of the installation to node2 can be completed and failover testing can begin!

So, the moral of the story- Don’t create three VIPs for three shared storage drives on one failover cluster. It’s just going to annoy you, your installation and even more so, me, if you come and ask me for assistance… 🙂