RMOUG 2015 Training Days Review

I’m speaking at HotSos Symposium 2015 this week, so I thought I would blog about the results of the conference I direct, which finished up just two weeks ago. I’m not admitting to being overwhelmed by OEM questions here, as I’m rather enjoying it. I love seeing folks so into Enterprise Manager and look forward to more! Keep the ideas for more blog posts coming! I’ll write on all these great topics in upcoming posts.

Rocky Mountain Oracle User Group (RMOUG) Training Days 2015 is over for another year, but the conference is a task that encompasses approximately 10-11 months of planning and anywhere from 120-200 hours per year of volunteer work from me as the Training Days Director. This in no way includes the countless hours contributed by Team YCC, our conference connection who helps us manage the conference, or by the invaluable volunteers from our membership who assist us with registration, drive speakers to and from the airport, and serve as ambassadors for our 120+ technical sessions.

After each conference I direct, I compile a ton of data that assists me in planning for the next year’s conference. This starts immediately after the current year’s conference and comprises not only the feedback offered to me in the conference evaluations, but also the spoken and email feedback that attendees and speakers are kind enough to share with me. I find this data crucial to my planning for the next year, but there is an important set of rules I follow to ensure I get the most from the data. I manage the conference much the way I manage database environments: data is king. I’m going to share just a small bit of this data with you today, but it will give you an idea of the detail I get into when identifying the wins, the opportunities and the challenges for next year’s conference.

One of the major changes I implemented in the evaluations was based on a conversation with many of the Oak Table members about the values offered. When given the opportunity to grade a venue, speaker or event on the following:

- Very Satisfied

- Satisfied

- Unsatisfied

Reviewers were more inclined to choose “Satisfied” of the three options. It was easy and didn’t demand a lot of thought, whereas a choice of 1-10 values would result in more valuable data in my evaluations. Being the glutton for punishment I am, and finding logic in the conversation, I chose to update our evaluations to a 1-10 scale instead of the above choices or a 1-5 scale.

It’s been a very “interesting” and positive change. Not only did it bring up our scoring from “Satisfied”, which was an average rating, to higher marks overall, but we received more constructive feedback that can be used to make the conference even better next year.

Colorado Convention Center

Although we are continually searching for the best venue for the conference, we receive positive feedback on the Colorado Convention Center. Our attendees appreciate the central location and the opportunity to enjoy all the restaurants, entertainment and such in the downtown area. The Colorado Convention Center offers us the world-class venue that a conference of our size deserves. Our speakers have little trouble securing funding to travel and speak because of the location, too.

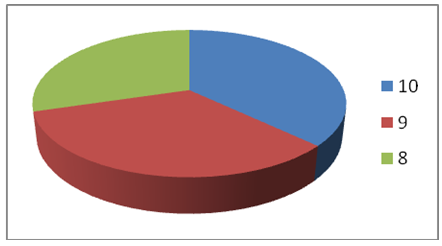

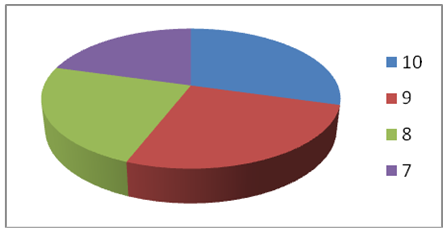

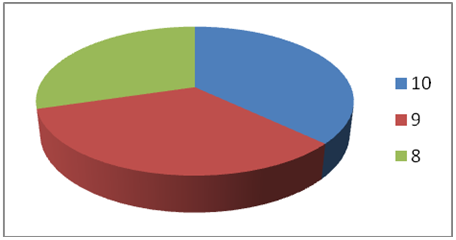

Notice that we don’t have any scores from 1-10 that are under 8! Our average score was 9.57, so pretty impressive. That was the overall average even with how often they let us down on coffee/tea and other refreshments between sessions (yeah, I’ll be talking to them about this, as I feel it’s very important to have during those breaks).

Project O.W.L.

This was part of the new marketing initiative I put together this year for RMOUG. New additions at conferences are always unnerving. We had RAC Attack last year, but when you create a new exhibitor area, new sponsorships and attendee participation opportunities, you hope every group will get what they need out of the initiative. We added Rep Attack (replication from DBVisit), Clone Attack (from Delphix), a hardware display from Oracle and a Stump the Expert panel from OTN, who also sponsored our RAC Attack area.

We did pretty well with Project O.W.L. (which stands for Oracle Without Limits), but we learned from our evaluations that our attendees really wanted all those “attack” opportunities on the first ½ day, during our deep dives and hands-on labs.

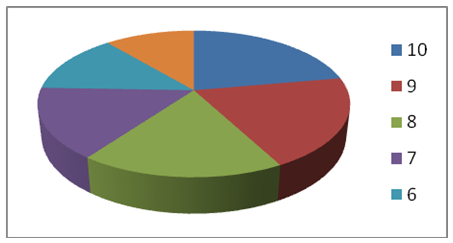

The reviewers didn’t complain about any of the “Attack” sessions or hardware displays, but gave lower scores (down to 6 on a scale of 1-10) because of scheduling changes they really wanted to see for this new event offering.

Length of Conference

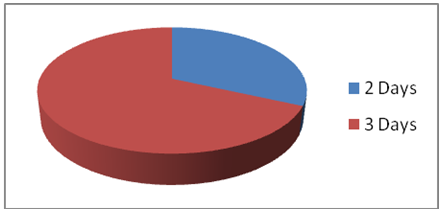

We have offered a 2 ½ day conference for the last couple of years, after previously having a 2 day conference and an additional ½ day of hands-on labs. Last year we started offering a single day pass for those few who were unable to join us for the entire event. This gave our attendees additional flexibility, and although only about 35 people took advantage of it, it removed the challenge we had with people sharing badges, which impacted our “true attendance count” when working with the Colorado Convention Center on our next year’s contract. It also increased the number of folks who asked for a longer conference.

Currently, 65% of our attendees who’ve filled out their evaluations would like to extend the conference to a full three days. I’ve also seen some benefits in separating the hands-on labs/deep dive sessions by development vs. database focus to get the most out of the three days. This would mean that during the DBA sessions, we would have the development-centric deep dives and HOL, and vice versa for the DBA deep dives/HOL. This scheduling would allow us to add another track; our current comments list an interest in DevOps and VMware or hardware.

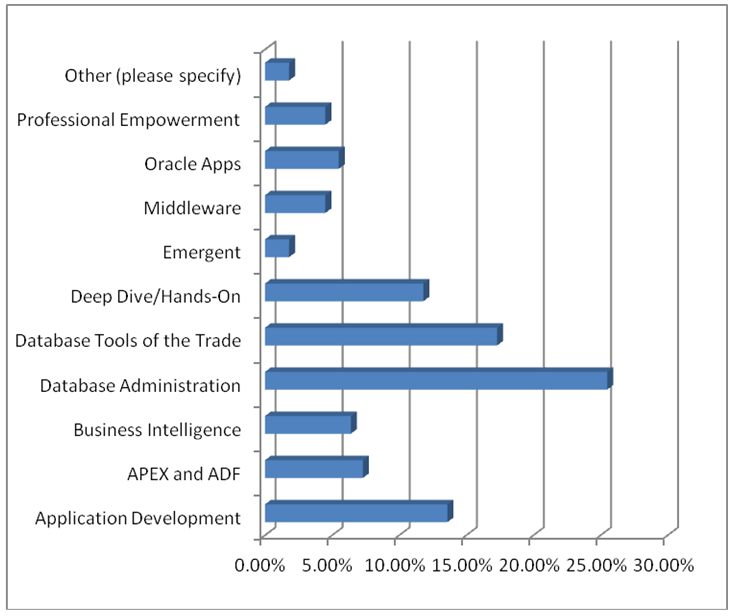

Amount of Sessions

We consistently have comments saying, “Not enough DB12c” and the next might say, “Too much DB12c”. Below that is a comment asking for more development sessions, followed by someone asking for fewer. This is expected and actually tells me when I’m in my “sweet spot” of session scheduling. Our tracks closely match our attendees’ designated roles, so we know we are doing well with our schedule.

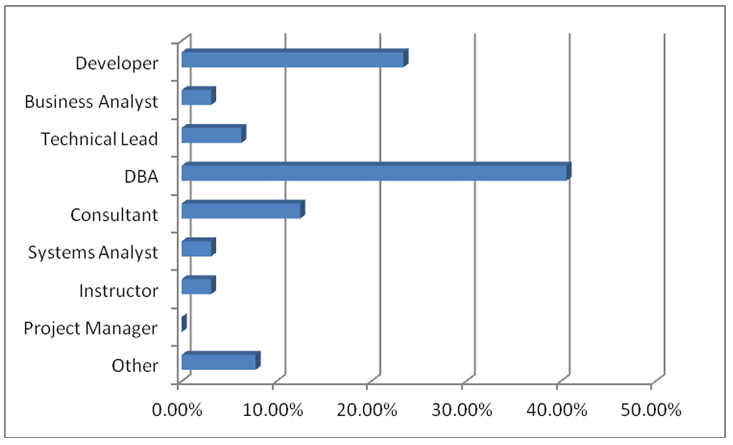

Percentage of Sessions for Each Track

Roles that Attendees Hold

If you compare the session percentages in our conference with the roles that our attendees hold, you will see that we have an excellent balance: the share of sessions in each track matches the share of attendees who will be interested in it.

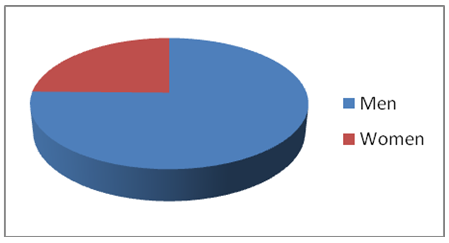

Women Attending Training Days

The reason I started Women in Tech at RMOUG was that I did a count (we don’t collect information on the gender of our attendees, but I can tell either by name or by knowing the person, which allows me to count about 97% of our attendees) and was aware that we only had 7% attendance by women. With the introduction of the WIT sessions, we have now increased that attendance to over 22%.

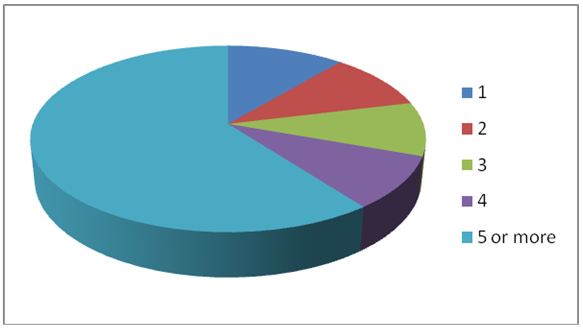

New Attendance

We do ask our attendees how many Training Days they have attended. I noted a number of folks who felt the people they’d always seen at the conference were no longer attending, and we’d noticed that, as with many Oracle User Group conferences, the attendees are “aging out”. Gaining new attendees through new Oracle customers, startups and new technologies is essential. Knowing if you are successful is important, too.

Currently, 40% of our attendees have attended four or fewer Training Days, which tells us we are making headway in introducing our conference and RMOUG to the area. We are still retaining 59% of our long-term attendees (we do have some who have attended most of our 26 conferences, too!). Keeping both groups satisfied is also a big challenge, so again, this data shows us that we are doing a very good job.

We had an average rating of 8.85 on session quality on a scale of 1-10, and most of the complaints came when anyone, and I do mean anyone, thought they could get away with marketing in their sessions. No matter how often we let people know that marketing is very frowned upon by our attendees, and that abstract reviewers give low scores to any abstract that appears to have marketing in it, someone still tries to push the marketing card. The session level evaluations won’t be out for a little while, but I already fear for those who were called out at the conference level for marketing or sales in their technical session; those were the ones that accounted for the majority of the scores of 7.

Session Quality

We couldn’t have the great speakers, topics and quality of sessions without our great abstract reviewers and committee. We have around 50 reviewers, made up of local attendees, ACEs and Oak Table members. This provides us with the best overall scoring. We ask people to review only those tracks they are knowledgeable in and never to review their own abstracts or those that may be considered a conflict of interest. Even my own abstracts are submitted for review, and then I pull all of mine, knowing that I’ll be onsite; if I need a last-minute replacement, it comes in handy to slip in one of mine or Tim Gorman’s, as we have a few that have been approved. I’m commonly quite busy and prefer to give as many speakers as possible an opportunity to speak, so I have no problem pulling mine from the schedule unless absolutely required.

We achieved an average score of 9.57 on session quality on a scale of 1-10, so this tells you just how effective our abstract review and selection process is. I applaud and recognize our abstract reviewers and thank them for making my job so easy, not only when choosing abstracts for our conference, but also when someone asks why they weren’t selected: the scores and comments (sans the reviewer names, which remain between the committee and myself) offer feedback to help the speaker change their abstract submission in the future for a better chance of getting accepted. We receive over 300 abstracts per year and can only accept around 100, so we are forced to say no to 2/3 of the abstracts submitted.
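For anyone who wants to check that ratio, here is a minimal Python sketch of the arithmetic. The round figures 300 and 100 are assumptions standing in for "over 300" and "around 100", so the result is approximate rather than an exact count from our abstract system.

```python
from fractions import Fraction

# Assumed round figures: the post says "over 300" submitted and "around 100" accepted.
submitted = 300
accepted = 100

declined = submitted - accepted

# Express both shares as exact fractions of all submissions.
acceptance_rate = Fraction(accepted, submitted)
decline_rate = Fraction(declined, submitted)

print(f"accepted: {acceptance_rate} of submissions")
print(f"declined: {decline_rate} of submissions")
```

With more than 300 submissions and the same number of slots, the declined share only goes up from there.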

Overall, our registration count was up for paying attendees, which is a rare thing for user group conferences. Our number of volunteers also increased, which is crucial to our conference success. RMOUG is a non-profit that relies on the power of our great volunteer base. These volunteers drive many of our speakers to and from the airport, register attendees and serve as ambassadors to each and every session. Our exhibitor area was 40% larger than it’s been in previous years, which brings additional revenue that RMOUG depends on for Quarterly Education Workshops, Special Interest Groups, the RMOUG Newsletter, SQL>Update and other yearly expenditures. RMOUG couldn’t survive without the contributions of so many different groups, community participation and sponsorship. This user group is powerful because of its community, and that support deserves a round of applause for making another Training Days conference a success!
