Data Gravity and the Network

The network has often been viewed as “no man’s land” for the DBA. Our tools may identify network latency, but they rarely go into any detail, leaving the network outside our jurisdiction.

As we work through data gravity, i.e., the weight of data and the pull that draws applications, services, etc. toward their data sources, we have to inspect what connects them to the data and what slows them down. Yes, the network.

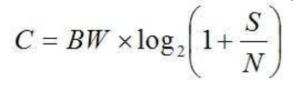

We can’t begin to investigate the network without spending some time on Shannon’s law, also known as the Shannon-Hartley theorem. It gives the maximum capacity (transmission bit rate) that can be achieved over a channel with a given bandwidth and given noise characteristics.
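For reference, the theorem is usually stated as follows, where C is the channel capacity in bits per second, B is the bandwidth in hertz, and S/N is the signal-to-noise ratio expressed as a linear power ratio (not in decibels). The worked numbers, a 3 kHz channel at 30 dB SNR, are an illustrative assumption rather than a measurement of any real line.

$$
C = B \log_2\!\left(1 + \frac{S}{N}\right)
$$

$$
\frac{S}{N} = 10^{30/10} = 1000, \qquad C = 3000 \log_2(1 + 1000) \approx 3000 \times 9.967 \approx 29{,}900\ \text{bit/s}
$$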

The ideas behind this theorem have been around for quite some time in the telephony world, going back to 1903, when W.M. Minor patented a concept for increasing the capacity of transmission lines. Over the years, multiplexing and quantizers were introduced, but the core principles have stayed the same:

- A given communication (or data) system has a maximum rate of information, C, known as the channel capacity

- If the transmission information rate R is less than C, then data can be transmitted in the presence of noise with an arbitrarily small error probability by using intelligent coding techniques

- To get lower error probabilities, the encoder has to work on longer blocks of signal data. This entails longer delays and higher computational requirements

In layman’s terms, the data is only going to go as fast as it can without crossing an error threshold.
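The relationship between C and R can be sketched in a few lines of Python. This is a minimal illustration assuming an ideal Shannon-Hartley channel; the function names and the 3 kHz / 30 dB example are hypothetical, and real links always fall short of this theoretical bound.

```python
import math


def db_to_linear(snr_db: float) -> float:
    """Convert a signal-to-noise ratio in decibels to a linear power ratio."""
    return 10 ** (snr_db / 10)


def shannon_capacity(bandwidth_hz: float, snr_db: float) -> float:
    """Theoretical upper bound on the bit rate (bits/s): C = B * log2(1 + S/N)."""
    return bandwidth_hz * math.log2(1 + db_to_linear(snr_db))


def rate_is_achievable(rate_bps: float, bandwidth_hz: float, snr_db: float) -> bool:
    """True if R < C, i.e. coding can, in principle, make errors arbitrarily rare."""
    return rate_bps < shannon_capacity(bandwidth_hz, snr_db)


if __name__ == "__main__":
    # Hypothetical channel: 3 kHz of bandwidth at 30 dB SNR.
    capacity = shannon_capacity(3_000, 30)
    print(f"capacity ~ {capacity / 1000:.1f} kbit/s")
    for rate in (28_800, 33_600):
        print(rate, rate_is_achievable(rate, 3_000, 30))
```

Note that the bound says nothing about how hard the coding is: pushing R closer to C is exactly where the longer blocks, longer delays, and higher computational cost from the last bullet come in.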

As DBAs, we always inspect waits in the form of latency. Latency is simply a measure of time, and you should always tune for time, or you’re just wasting time. Latency is the closest thing we have to a measure of speed, because it reflects how long it takes to cover the distance between two points; when discussing a data source and an application, those are your two points.

This is where it gets interesting. Super-low-latency networks, such as InfiniBand, which is common in engineered systems like Exadata, don’t necessarily offer huge bandwidth. In comparison, standard networks can carry much higher volume, but they can’t talk as “fast” on a packet-by-packet basis. These networks compete by providing many parallel lanes, but as we know, an individual lane simply won’t be able to match that per-packet speed.
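To make the latency-versus-bandwidth trade-off concrete, here is a deliberately simplified Python model: total time is the number of round trips multiplied by the round-trip time, plus the payload size divided by bandwidth. The two links and both workloads are hypothetical numbers chosen for illustration, not measurements of InfiniBand, Exadata, or any specific network, and the model ignores TCP windowing, congestion, and protocol overhead.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    name: str
    rtt_s: float          # round-trip latency in seconds
    bandwidth_bps: float  # usable bandwidth in bits per second


def transfer_time(link: Link, round_trips: int, payload_bytes: int) -> float:
    """Simplified model: latency paid once per round trip plus serialization time."""
    latency_cost = round_trips * link.rtt_s
    serialization_cost = payload_bytes * 8 / link.bandwidth_bps
    return latency_cost + serialization_cost


# Hypothetical links; the numbers are illustrative, not vendor specs.
LOW_LATENCY = Link("low-latency", rtt_s=50e-6, bandwidth_bps=10e9)
HIGH_BANDWIDTH = Link("high-bandwidth", rtt_s=1e-3, bandwidth_bps=100e9)

# Hypothetical workloads as (round_trips, payload_bytes).
CHATTY = (100_000, 100_000 * 1024)  # 100k small request/response pairs of ~1 KiB
BULK = (1, 100 * 10**9)             # one 100 GB transfer

if __name__ == "__main__":
    for link in (LOW_LATENCY, HIGH_BANDWIDTH):
        chatty = transfer_time(link, *CHATTY)
        bulk = transfer_time(link, *BULK)
        print(f"{link.name:15} chatty={chatty:8.2f}s bulk={bulk:8.2f}s")
```

Under these assumed numbers, the low-latency link finishes the chatty workload roughly 20x faster, while the high-bandwidth link wins the bulk transfer by about 10x. A row-by-row application pays the round-trip cost on every call, no matter how wide the pipe is.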

I’m not going to go further into this theorem, including the Shannon limit, but the network, especially with the introduction of the cloud, has reared its ugly head as the newest bottleneck. There’s a very good reason cloud providers like AWS came up with Snowmobile, a service that physically hauls data to AWS in a truck-pulled shipping container rather than sending it over the network. On every cloud project I’ve been on, the network has had a significant impact on its success. My advice to all DBAs is to build up your knowledge of networking. If you didn’t respect your network administrator before, you will after you do a little research… 🙂 It will serve you well as you embrace the cloud.
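A quick back-of-the-envelope calculation shows why shipping data can beat the wire. This sketch assumes the link sustains 80% of its nominal bandwidth (an arbitrary, hypothetical figure) and ignores everything else that slows a real migration, such as retries, throttling, and shared links.

```python
def days_to_transfer(data_bytes: float, bandwidth_bps: float, efficiency: float = 0.8) -> float:
    """Estimated days to push data_bytes over a link.

    efficiency is an assumed fraction of nominal bandwidth actually achieved.
    """
    if not 0 < efficiency <= 1:
        raise ValueError("efficiency must be in (0, 1]")
    seconds = data_bytes * 8 / (bandwidth_bps * efficiency)
    return seconds / 86_400  # seconds per day


PB = 10**15  # one petabyte (decimal)

if __name__ == "__main__":
    for gbps in (1, 10, 100):
        days = days_to_transfer(PB, gbps * 10**9)
        print(f"1 PB over {gbps:>3} Gbit/s: {days:7.1f} days")
```

With the assumed 80% efficiency, a single petabyte takes roughly 116 days at 1 Gbit/s and more than 11 days even at 10 Gbit/s, which is the kind of arithmetic that makes a truck look attractive.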
