As I continue to work on very large databases, (VLDB), I am exposed to more opportunities to speed up IO. This can involve Oracle’s solution of Exadata or stand alone improvements with options such as SSD, (Solid State Disk) which can offer faster IO performance at a fraction of the price. When this option becomes a reality, there will always be non-DBA’s that advise what would best benefit from the hardware, but to take the time to research what would truly benefit is important for the DBA to perform.

The Just the Facts on Solid State Disk:

There are several types of SSD available:

- Flash memory-based

- DRAM-based

- Cache or Buffer

The SSD can have different types of host interfaces, depending on the main hardware you are interfacing with and/or vendor choices:

- PCI

- Fibre Channel

- ATA, (Serial or Parallel)

- SCSI, (Serial or Parallel)

- USB

Rarely do we get a chance to move entire Terabytes of data onto fast disk, but rather are offered limited, faster disk to utilize for crucial objects that can give us the “most bang for the buck.” Commonly this is due to the price of these specialized and impressive IO read/write drives, but it can also be due to limitations on the hardware they are interfacing with.

As I started working on databases that utilized faster disk, with or without ASM, it became apparent that what these speedy disks were allocated to wasn’t always what SHOULD have been placed in the new location. Where indexes, look up tables and temp tablespace experienced impressive gains vs. the standard disk drives they had formerly resided on, I have been quick to dissuade anyone from placing redo logs on SSD.

I’m going to go through what data, reports and queries that I utilize to decide what should be on fast disk, along with my benchmark findings when I did have the opportunity to create an entire database on Fusion Octal fast disk.

Getting the most out of SSD is all about getting what won’t fit in memory, (SGA and PGA) onto a faster disk. All consistently large, [consistent] read tasks that the database must direct to disk for, but doesn’t write as often to disk, (visualizing batch loads vs. heavy transactional) and ONCE TUNING OPPORTUNITIES HAVE BEEN EXHAUSTED, are excellent choices for research when deciding what should be placed on SSD. This information can be achieved multiple ways as a DBA. AWR/ADDM and ASH reports can provide solid, high level data to direct you in the right direction if you are not as familiar with your data or wish to validate some of what you already know. For those of you that do not have the tuning pack license, then Statspack can do the same. Tracing can offer a detailed output that will tell you about objects that you are often going to slower disk for. OEM can provide graphs that will show IO demands on a heavily “weighted” system, as can other GUI tools in the market.

AWR/Statspack and I/O Wait Indicators

Your group has already decided that IO is an issue and should have verified this in the top 5 wait events that can be seen through AWR or statspack. The snapshots utilized for this examination should be times of heavy IO in the database environment as can be seen in the example Table 1.

Table 1

| Top 5 Timed Events Avg %Total | |||||

| ~~~~~~~~~~~~~~~~~~ wait Call | |||||

| Event | Waits | Time (s) | (ms) | Time | Wait Class |

| —————————— | ———— | ———– | —— | —— | ———- |

| db file sequential read |

979,382 |

36,066 |

37 |

45.1 |

User I/O |

| db file scattered read |

5,083,058 |

22,401 |

4 |

28 |

User I/O |

| Direct path write temp |

13,577 |

17 |

User I/O | ||

| db file parallel write |

464,287 |

5,136 |

11 |

6.4 |

System I/O |

| direct path read temp |

366,956 |

2,671 |

7 |

3.3 |

User I/O |

From here, we inspect our AWR or statspack reports, there is a section that should be inspected first and foremost, referred to as Segments by Physical Reads the output from this section can be seen in Table 2.

Table 2

| Tablespace | Obj. | Physical | ||||

| Owner | Name | Object Name | Type | Reads | %Total | |

| ———- | ———- | ——————– | —– | ———— | ——- | |

| SCHM_OWNR | TBLSPC1_DATA | TBL1_FILE_1 | TABLE |

86,788,592 |

47.87 |

|

| SCHM_OWNR | TBLSPC2_DATA | TBL1_FILE_PK | INDEX |

80,544,192 |

46.59 |

|

| SCHM_OWNR | TBLSPC1_IDX | TBL2_MR_PK | INDEX |

74,742,752 |

45.39 |

|

| SCHM_OWNR | TBLSPC1_IDX | TBL3_M_PK | INDEX |

40,924,576 |

28.43 |

|

| SCHM_OWNR | TBLSPC2_DATA | TBL4 | TABLE |

26,790,464 |

15.52 |

Tuning, Always the First Step

The first step in the process is to inspect I/O issues with large objects. Is there a partitioning strategy that can take the physical reads and IO down for the objects in question? If there is not or there is still a requirement for full scans or large index or partition scans, then you need to look and see what tuning options there are for the code involved. If there is already partitioning in place, is it the right partitioning key and/or is sub-partitioning in order.

Once this process has completed, then inspect performance for physical reads again and verify the objects in question are still a bottleneck for IO. If so, then they may be a valid choice to relocate to a new ASM diskgroup residing on SSD.

Creating a specific ASM disk group for the SSD disk is the obvious choice, as the SSD will not be part of the standard disk groups without performance and rebalance challenges. Once complete, you will then have the new SSD diskgroup available for use.

Inspect the sizes of the objects in left in your “top 5 physical IO objects” and decide what you move over for initial testing. I commonly make a copy and test a copy of the objects against the code to test true performance gains, ensuring that there are no required physical storage required changes as well. ***over what you need for capacity growth estimates. What should you bring over next? Now if we are still using the same reports that are showing above, I would look carefully at what I have available and would start to inspect temp usage as a possible next candidate.

It is important that if you consider temp, that it is in a “controlled” state for your environment. It is not uncommon for many DBA’s to set TEMP to autoextend and not pay attention to temp tablespace usage. I fully advocate the opposite and track temp usage, along with monitor alerts with scripts for anytime any user or process consumes a certain threshold per process on any of my production systems.

Considering the amount of waits on temp read and writes, tuning opportunities may be boundless on hash joins and sorting. Low hanging fruit in these categories will involve looking for “order by’s” that have been left in for insert statements, (not sure how often I’ve seen this, but it’s a very common and an unfortunate occurrence…) In regards to hash joins, there can be examples of wide reporting tables only one or two columns are actually required for the results and the join. A choice of CTAS, (create table as select) of only the columns required for the process, dropping post the join to the second table, can drastically trim time and temp usage for a hash of tables that involve only a few columns on a wide table where an index is a less than efficient answer. This choice allows the performance gain of the hash without the performance hit of swapping to temp when wide tables cause PGA to never be enough.

After tuning temp usage due to large hash joins and sorting outside of PGA, inspect the max temp tablespace required. If this will now fit without impacting capacity planning requirements for the SSD, move the temp tablespace onto the SSD ASM disk group.

Scripts to Inspect IO Usage

There are many scripts that can be written or available on the web and in reports to inspect IO usage. The following is a good example of one:

select

io.cnt Count,

io.event Event,

substr(io.obj,1,20) Object_Name,

io.p1 P1_Value,

f.tablespace_name Tablespace_Name

from

(

select

count(*) cnt,

round(count(*)/(60*60),2) aas,

substr(event,0,15) event,

nvl(o.object_name,decode(CURRENT_OBJ#,-1,0,CURRENT_OBJ#)) obj,

ash.p1,

o.object_type otype

from v$active_session_history ash,

all_objects o

where ( event like 'db file s%' or event like 'direct%' )

and o.object_id (+)= ash.CURRENT_OBJ#

and sample_time > sysdate - 7/(60*24)

group by

substr(event,0,15) ,

CURRENT_OBJ#, o.object_name ,

o.object_type ,

ash.p1

) io,

dba_data_files f

where

f.file_id = io.p1

and f.tablespace_name not like '%RAM%' –-exclude SSD objects

Order by io.cnt desc

/

| COUNT | EVENT | OBJECT_NAME | P1_Value | TABLESPACE_NAME |

| 122 | db file sequent | TBL1_CHAIN | 102 | N_DATA |

| 33 | db file sequent | HH_TBL1_FDX01 | 161 | H_INDX1 |

| 28 | db file sequent | CA_TBL2_PK | 270 | C_INDX |

| 25 | db file sequent | I_TBL3_IDX02 | 225 | I_INDX2 |

| 21 | db file sequent | E_TBL4 | 43 | E_DATA |

| 20 | direct path rea | I_MRG_TBL | 75 | M_DATA |

| 23 | db file scatter | C_TBL3 | 50 | C_DATA |

The above script gives you clear examples of what objects you should point your research to, first indexes, (sequential) and in this case, a look up table, (direct path read).

Building a Database Entirely on SSD

We were given this opportunity recently to test performance gains and decide if budget should be set aside for investing in the hardware to build entire databases on SSD vs. strategic objects within a database. We have a process that takes approximately five days to aggregate a snapshot in time, up to 12TB of data. The goal was to see, could we accomplish this in two days if given all SSD for the database vs. a combination of standard disks on a disk array and SSD for high read/write data.

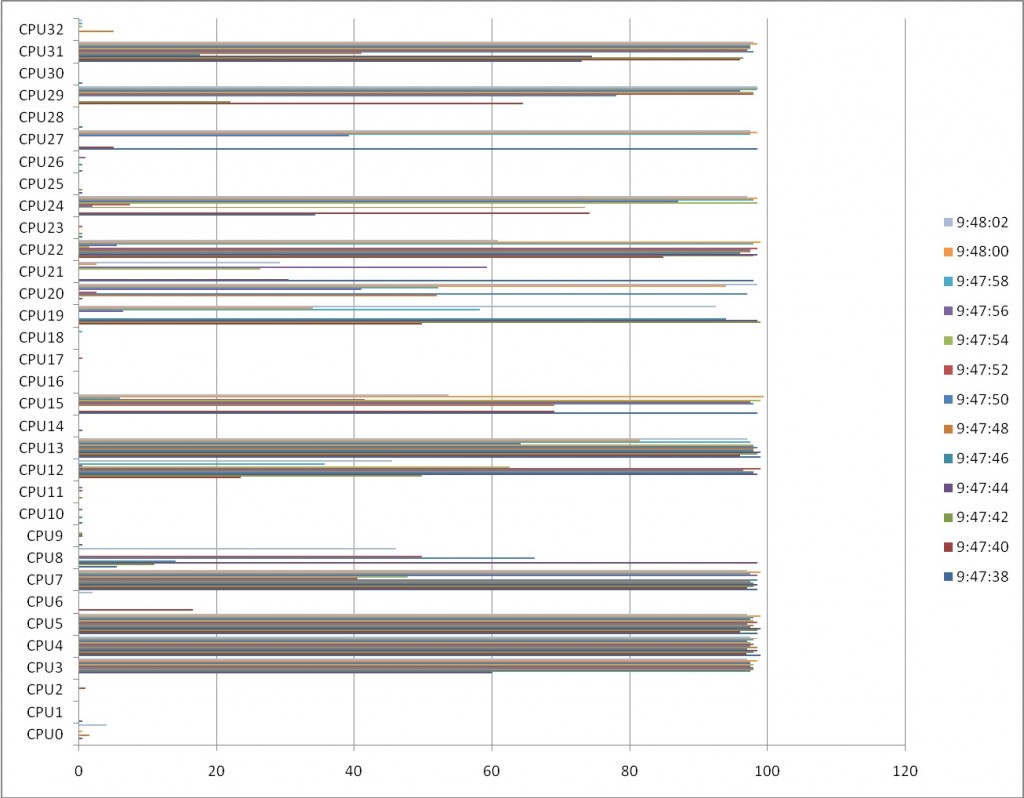

This sounds like a slam dunk, but it is more challenging than one might think. There are small things to that have to be updated in the database, such as system statistics in 10g to ensure the database knows fully the gift you have granted it, but then you may also need to make significant logical changes to take advantage of the hardware due to limitations in CPU and memory per process. The build was on a server that utilized hyper-threading and some of the “performance settings” actually appeared to work against the database vs. the lesser setting that might stripe the CPU usage more efficiently. The graph below show the hits against the first 32 of “hyper-threaded” 64 CPU’s:

Figure 1

This graph in Figure 1 only shows CPU usage over a small snapshot of time, but over long intervals, it showed the same differing data vs. SAR or other reports from the Admin side- the database continued to hit the same CPU’s over and over, leaving other CPU’s untouched for extended periods of time. This did not bode well for the database performance, high read/write capability or not.

The build time improvements were impressive, but the one thing that must be included is that the improvement in performance was not just a hardware improvement step. There was first the additional hardware and then a tuning process at the database level to ensure the processes were able to achieve the best performance the solid state disk offered it, (comparison of columns New Run Time against the Final Run Time in Figure 2.)

| Process Step | Standard Disk/SSD | Total Min. | SSD Without Tuning | New Run Time | Initial Perf. Gain | SSD With Tuning | Final Run Time | Total Perf. Gain |

| DIM Table 1 CTAS | 4 HOURS 20 MINUTES 27 SECS | 260 min | 3 HOURS 38 MINUTES 24 SECS | 218 min |

19% |

2 HOURS 43 MINUTES 45 SECS | 164 min |

58% |

| CTAS Table 2 | 4 HOURS 23 MINUTES 11 SECS | 263 min | 0 HOURS 16 MINUTES 2 SECS | 16 min | 16 Times Perf | 0 HOURS 19 MINUTES 8 SECS | No Tuning | N/A |

| CTAS Table 3 | 1 HOURS 29 MINUTES 21 SECS | 89 min | 0 HOURS 44 MINUTES 27 SECS | 44 min | 2 Times Perf. | 0 HOURS 57 MINUTES 19 SECS | No Tuning | N/A |

| CTAS Table 4 | 2 HOURS 55 MINUTES 58 SECS | 175 min | 0 HOURS 42 MINUTES 16 SECS | 42 min | 4 Times Perf. | 0 HOURS 47 MINUTES 55 SECS | No Tuning | N/A |

| CTAS Table 5 | 10 HOURS 7 MINUTES 41 SECS | 607 min | 1 HOURS 50 MINUTES 7 SECS | 110 min | 6 Times Perf. | 1 HOURS 42 MINUTES 6 SECS | No Tuning | N/A |

| CTAS Table 6 | 11 HOURS 32 MINUTES 40 SECS | 692 min | 4 HOURS 51 MINUTES 17 SECS | 291 min | 2 Times Perf. | 5 HOURS 9 MINUTES 26 SECS | No Tuning | N/A |

| Multiple Table Aggregation | 25 HOURS 15 MINUTES 3 SECS | 1515 min | 9 HOURS 58 MINUTES 1 SECS | 598 min | 3 Times Perf. | 5 HOURS 16 MINUTES 31 SECS | 316 min | 5 Times Perf. |

| Summary Table 1 Agg. | 25 HOURS 24 MINUTES 35 SECS | 1524 min | 10 HOURS 0 MINUTES 20 SECS | 600 min | 3 Times Perf. | 5 HOURS 18 MINUTES 14 SECS | 318 min | 5 Times Perf. |

| Summary Table 2 Agg. | 25 HOURS 23 MINUTES 56 SECS | 1523 min | 10 HOURS 7 MINUTES 22 SECS | 607 min | 3 Times Perf. | 5 HOURS 25 MINUTES 54 SECS | 325 min | 5 Times Perf. |

| Index Creation Table 1 | 1 HOURS 16 MINUTES 33 SECS | 76 min | 0 HOURS 53 MINUTES 42 SECS | 54 min |

39% |

0 HOURS 53 MINUTES 14 SECS | No Tuning | N/A |

| Index Creation Table 2 | 1 HOURS 22 MINUTES 55 SECS | 82 min | 0 HOURS 59 MINUTES 55 SECS | 60 min |

28% |

0 HOURS 59 MINUTES 6 SECS | No Tuning | N/A |

| CTAS Aggr Table 3 | 6 HOURS 36 MINUTES 20 SECS | 396 min | 3 HOURS 21 MINUTES 18 SECS | 201 min |

50% |

3 HOURS 13 MINUTES 38 SECS | No Tuning | N/A |

| Index Creation Table 3 | 0 HOURS 52 MINUTES 2 SECS | 52 min | 0 HOURS 40 MINUTES 3 SECS | 40 min |

24% |

0 HOURS 48 MINUTES 15 SECS | No Tuning | N/A |

| CTAS Aggr. Table 4 | 2 HOURS 41 MINUTES 13 SECS | 161 min | 1 HOURS 32 MINUTES 8 SECS | 92 min |

43% |

1 HOURS 28 MINUTES 25 SECS | No Tuning | N/A |

| CTAS Aggr Table 5 | 3 HOURS 46 MINUTES 59 SECS | 226 min | 2 HOURS 58 MINUTES 29 SECS | 179 min |

21% |

2 HOURS 55 MINUTES 20 SECS | No Tuning | N/A |

| CTAS Aggr. Table 6 | 0 HOURS 51 MINUTES 27 SECS | 51 min | 0 HOURS 36 MINUTES 46 SECS | 37 min |

28% |

0 HOURS 34 MINUTES 33 SECS | No Tuning | N/A |

| Insert to Table 6 | 0 HOURS 5 MINUTES 24 SECS | 5 min | 0 HOURS 5 MINUTES 6 SECS | 5 min | NONE | 0 HOURS 4 MINUTES 52 SECS | 5 min | NONE |

| Update to Table 6 | 26 HOURS 40 MINUTES 41 SECS | 1640 min | 25 HOURS 9 MINUTES 52 SECS | 1510 min |

8% |

17 HOURS 44 MINUTES 2 SECS | 1084 min |

44% |

| CTAS Table 7 | 1 HOURS 1 MINUTES 48 SECS | 61 min | 0 HOURS 7 MINUTES 43 SECS | 8 min | 13 Times Perf. | 0 HOURS 6 MINUTES 37 SECS | No Tuning | N/A |

| CTAS Aggr Table 8 | 0 HOURS 28 MINUTES 31 SECS | 28 min | 0 HOURS 22 MINUTES 12 SECS | 22 min |

22% |

0 HOURS 19 MINUTES 25 SECS | No Tuning | N/A |

| CTAS Mod TBLS 9/10 | 1 HOURS 42 MINUTES 36 SECS | 102 min | 1 HOURS 42 MINUTES 22 SECS | 102 min | NONE | 1 HOURS 39 MINUTES 25 SECS | No Tuning | N/A |

| CTAS Table Aggr. 11 | 2 HOURS 26 MINUTES 58 SECS | 147 min | 1 HOURS 29 MINUTES 53 SECS | 90 min |

49% |

1 HOURS 24 MINUTES 42 SECS | No Tuning | N/A |

| CTAS Aggr. Table 12 | 7 HOURS 24 MINUTES 44 SECS | 445 min | 6 HOURS 7 MINUTES 48 SECS | 368 min |

18% |

6 HOURS 6 MINUTES 40 SECS | No Tuning | N/A |

| CTAS Aggr. Table 13 | 6 HOURS 47 MINUTES 31 SECS | 408 min | 4 HOURS 38 MINUTES 1 SECS | 278 min |

32% |

5 HOURS 5 MINUTES 32 SECS | No Tuning | N/A |

| CTAS Aggr. Table 14 | 25 HOURS 23 MINUTES 32 SECS | 1524 min | 10 HOURS 9 MINUTES 51 SECS | 610 min | 3 Times Perf. | 5 HOURS 27 MINUTES 17 SECS | 327 min | 5 Times Perf. |

| CTAS Aggr. Table 15 | 1 HOURS 21 MINUTES 59 SECS | 82 min | 0 HOURS 22 MINUTES 49 SECS | 23 min |

65% |

0 HOURS 4 MINUTES 33 SECS | 4 min | 20 Times Perf. |

| Update to Table 13 | 0 HOURS 12 MINUTES 45 SECS | 13 min | 0 HOURS 49 MINUTES 58 SECS | 50 min | 3 Times LOSS!! | 0 HOURS 1 MINUTES 22 SECS | 1 min | 9 Times Perf. |

Figure 2

I must note that what challenged us in unresolved issues were waits on CPU due to hyper-threaded CPU issues.

Tuning involved for the third columns time elapsed involved the following:

- Bind variable additions

- Literal additions where bind peeking was an issue.

- A change from ASSM, (Automatic Segment Space Management) to manual segment space management where freelists could be set at the object level, (dynamically allocated freelists were not able to adjust quickly enough for some of the load processes…)

- Changes to initial transactions, percent free and parallel that made sense, (upping it for some, downgrading it for others that did not work with the partitioning or a need for partitioning…)

Inspecting I/O by SQL_ID

This script, (adopted from Tim Gorman’s sqlhistory.sql from, www.evdbt.com) does a wonderful job of pulling a clean, clear picture of what physical and logical I/O is occurring in a single SQL_ID, seen here in Table 3 :

Table 3

| +————————————————————————————————–+ | |||||||||

| Plan HV Min Snap Max Snap Execs LIO PIO CPU Elapsed | |||||||||

| +————————————————————————————————–+ | |||||||||

| 1766271350 659 659 1 593,134,283 12,961,814 14,657.45 15,067.05 | |||||||||

| +————————————————————————————————–+ | |||||||||

| ========== PHV = 1766271350========== | |||||||||

| First seen from “07/15/11 13:00:31” (snap #659) | |||||||||

| Last seen from “07/15/11 13:00:31” (snap #659) | |||||||||

| Execs LIO PIO CPU Elapsed | |||||||||

| ===== === === === ======= | |||||||||

| 1 593,134,283 12,961,814 14,657.45 15,067.05 | |||||||||

| Plan hash value: 1766271350 | |||||||||

| TQ | IN-OUT | PQ Distrib | ||||||

|

0 |

CREATE TABLE STATEMENT | 1543M(100) | ||||||

|

1 |

PX COORDINATOR | |||||||

|

2 |

PX SEND QC (RANDOM) | :TQ10001 | 464M | 397G | 4128K (7) | |||

| Q1,01 | P->S | QC (RAND) | ||||||

|

3 |

LOAD AS SELECT | |||||||

| Q1,01 | PCWP | |||||||

|

4 |

PX RECEIVE | 464M | 397G | 4128K (7) | ||||

| Q1,01 | PCWP | |||||||

|

5 |

PX SEND RANDOM LOCAL | :TQ10000 | 464M | 397G | 4128K (7) | |||

| Q1,00 | P->P | RANDOM LOCA | ||||||

|

6 |

PX PARTITION LIST ALL | 464M | 397G | 4128K (7) |

1 |

1000 |

||

| Q1,00 | PCWC | |||||||

|

7 |

HASH JOIN RIGHT OUTER | 464M | 397G | 14G | 4128K (7) | |||

| Q1,00 | PCWP | |||||||

|

8 |

TABLE ACCESS FULL | HDN_TBL | 231M | 112G | 576K (22) |

1 |

1000 |

|

| Q1,00 | PCWP | |||||||

|

9 |

HASH JOIN RIGHT OUTER | 464M | 171G | 6967M | 1551K (7) | |||

| Q1,00 | PCWP | |||||||

|

10 |

TABLE ACCESS FULL | HD_TBL | 310M | 50G | 144K (34) |

1 |

1000 |

|

| Q1,00 | PCWP | |||||||

|

11 |

TABLE ACCESS FULL | H_TBL | 464M | 95G | 339K (13) |

1 |

1000 |

|

| Q1,00 | PCWP |

| Summary Execution Statistics Over Time | |||||||||

| Avg Avg | |||||||||

| Snapshot Avg LIO Avg PIO CPU (secs) Elapsed (secs) | |||||||||

| Time Execs Per Exec Per Exec Per Exec Per Exec | |||||||||

| ———— ——– ——————- ——————- ——————- ——————- | |||||||||

| 15-JUL 13:00 1 593,134,283.00 12,961,814.00 14,657.45 15,067.05 | |||||||||

| ——– ——————- ——————- ——————- ——————- | |||||||||

| avg 593,134,283.00 12,961,814.00 14,657.45 15,067.05 | |||||||||

| sum 1 | |||||||||

| Per-Plan Execution Statistics Over Time | |||||||||

| Avg Avg | |||||||||

| Plan Snapshot Avg LIO Avg PIO CPU (secs) Elapsed (secs) | |||||||||

| Hash Value Time Execs Per Exec Per Exec Per Exec Per Exec | |||||||||

| ———- ———— ——– ——————- ——————- ——————- ——————- | |||||||||

| 1766271350 15-JUL 13:00 1 593,134,283.00 12,961,814.00 14,657.45 15,067.05 | |||||||||

| ********** ——– ——————- ——————- ——————- ——————- | |||||||||

| avg 593,134,283.00 12,961,814.00 14,657.45 15,067.05 | |||||||||

| sum 1 | |||||||||

+—————————————————————————————————————————

This report clearly shows the amount of logical vs. physical I/O coming from the statement in question. This gives the DBA a clear indicator if any object in the poor performing process would benefit a move to SSD or if tuning is in order to eliminate the I/O performance challenge. A combination of both may be chosen, as there are multiple right outer hash-joins which clearly show as the performance hit in the time elapsed and in the temp tablespace usage/significant I/O categories, (note that the process needs to scan ALL the partitions for the objects in question…)

SSD and Forced Hash Joins on Indexes

When a database design is impacted by the front-end tool required to present data in a proper format, such as Business Analytics Software, the price can be high to the DBA who has to manage resource usage. Many times the data must be presented in a very flat, wide format and requires a large amount of data pulled across a network interface. This can be in anywhere from a couple 100GB’s to multiple Terabytes. When you are the DBA looking at ways to increase performance when logical performance tuning is limited, solid state disk can offer you gains not offered anywhere else.

Business Analytics Software often will query a few 100GB to 1TB objects, hash join and then perform an order by. For the DBA, to create an index, then using a hint to force a hash join between an index and the large table can improve performance greatly, but to move the index onto SSD can increase the hash and limit the requirements for SSD at the same time.

create table new_ordertmp_tbl compress pctfree 0 tablespace data_1 as

SELECT /*+ USE_HASH(t,i) INDEX_FFS(i,I_TBL2_IDX) INDEX(t,CT1) */

cast(MOD(t.i_id, 1000) as number(3)) im_key

, LEAST(ROUND(MONTHS_BETWEEN(:b1, t.t_dt) + .4999 ), 48) AS r_key , t.i_id AS ib_id

, t.m_id, t.t_dt, cast(:b5 as varchar2(5)) m_cd, FIRST_VALUE(i.ib_id) OVER(

PARTITION BY t.i_id, t.m_id, t.t_nbr,t.t_dt ORDER BY t.t_dt ASC

) AS ibcid, t.t_nbr, cast(TO_NUMBER(TO_CHAR(FIRST_VALUE(t.t_dt) OVER(

PARTITION BY t.i_id, t.m_id, t.t_nbr,t.t_dt ORDER BY t.t_dt ASC

), ''YYYYMMDD'')) as number(8)) AS d_id,

FIRST_VALUE(DECODE(t.oct_cd, NULL, 'O','W', 'O', 'E', 'O', 'R', 'R', 'F')

) OVER(PARTITION BY t.d_id, t.m_id, t.t_nbr,t.t_dt ORDER BY t.t_dt ASC

) AS tct_cd, SUM(t.ot_amt) OVER(

PARTITION BY t.i_id, t.m_id, t.t_nbr,t.t_dt) AS ot_amt

, FIRST_VALUE(NVL(t.pmt_cd, ''U'')) OVER(

PARTITION BY t.i_id, t.m_id, t.t_nbr,t.t_dt ORDER BY t.t_dt ASC

) AS pmt_cd, SUM(t.i_cnt) OVER(

PARTITION BY t.i_id, t.m_id, t.t_nbr,t.t_dt) AS i_cnt

, FIRST_VALUE(t.cs_cd IGNORE NULLS) OVER(

PARTITION BY t.i_id, t.m_id, t.t_nbr,t.t_dt ORDER BY t.t_dt ASC

) AS cs_cd, FIRST_VALUE(t.cc_cd IGNORE NULLS) OVER(

PARTITION BY t.i_id, t.m_id, t.t_nbr,t.t_dt ORDER BY t.t_dt ASC

) AS cc_cd, t.oct_cd

FROM CT_TBL1 t, I_TBL2 i

WHERE t.m_id = :b5

AND t.t_dt BETWEEN :b1 AND :b2 AND i.ibid = t.i_id

order by i.i_id;

Object Sizes:

CT_TBL1, partition 7= 800GB

I_TBL2=1.2TB

While the I_TBL2_IDX, the index created on the I_TBL2 and possessing only the columns required for this routinely run query and leading with the I_ID column, is only 200GB.

Execution Plan for Query:

Table 4

| Description | Object | Cost | Cardinality | Bytes | PartitionID |

| SELECT STATEMENT, GOAL = ALL_ROWS |

107587 |

16356015 |

10079496 |

||

| WINDOW SORT |

107587 |

16356015 |

10079496 |

||

| WINDOW BUFFER |

107587 |

16356015 |

10079496 |

||

| WINDOW SORT |

107587 |

16356015 |

10079496 |

||

| WINDOW SORT |

107587 |

16356015 |

10079496 |

||

| FILTER | |||||

| HASH JOIN |

107371 |

16356015 |

10079496 |

||

| PARTITION LIST SINGLE |

330 |

16356015 |

8166868 |

7 |

|

| TABLE ACCESS FULL | CT_TBL1 |

330 |

16356015 |

8166868 |

7 |

| INDEX FAST FULL SCAN | I_TBL2_IDX |

23597 |

6399400008 |

2120000 |

The hash join is thus, decreased to a total size of 1TB, vs. the much larger size it would have been if the hash join would have been run against the table. By running it with the index residing on solid state disks, the actual performance to create the table from the CTAS in question was increased by 12 fold.

What does the IO look like on the solid state disk vs. the old standard disk? The differences are startling when viewed through iostat, (table 5).

Table 5

| Device: | rsec/s | wsec/s | avgqu-sz | %util |

| Raid 5 Disk |

55200 |

30224 |

215.72 |

84.03 |

| SSD |

52394.67 |

41306 |

223.74 |

7.49 |

As you can see, the IO is much less impacting on the SSD than the standard disk.

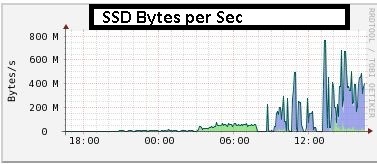

Via graphs, such as from Cacti, the differences in IO throughput can be seen for standard disk, (figure 3) and solid state disk, (figure 4.)

Figure 3

Figure 4

Summary

Solid state disk is here to stay and often will be seen as a “silver bullet” for production I/O issues. The goal of the DBA is to utilize this technology in a way that does not replace logical tuning and focus instead, in ways that may actually support positive changes enforcing both physical and logical tuning to get the most out of the new hardware available on the market today.

One comment on “Solid Choices for Oracle Tuning on Solid State Disk”

Comments are closed.