What happens with I/O requests over NFS and more specifically with Oracle? How does NFS affect performance and what things can be done to improve performance?

What happens at the TCP layer when I request with dd an 8K chunk of data off an NFS mounted file system?

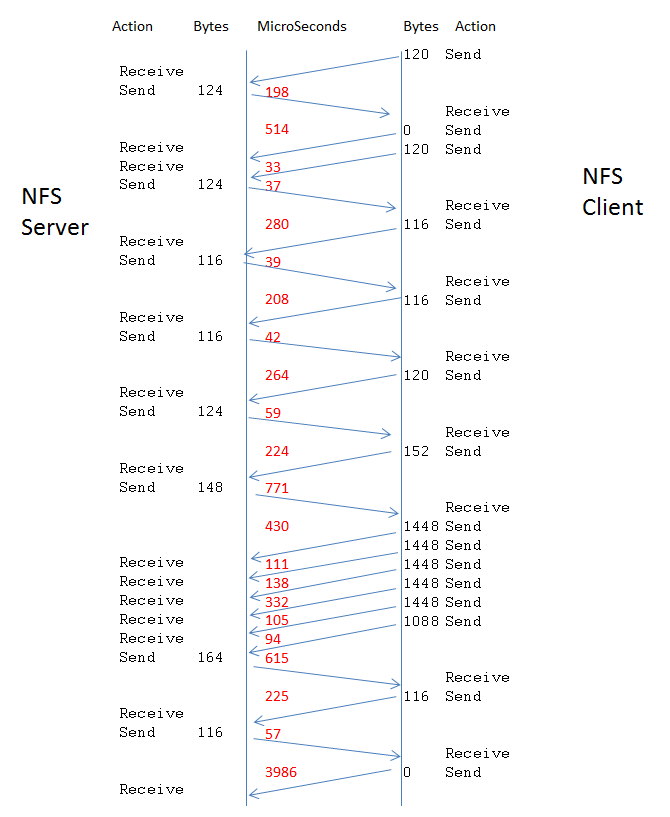

Here is one example:

I do a

dd if=/dev/zero of=foo bs=8k count=1

where my output file is on an NFS mount, I see the TCP send and receives from NFS server to client as:

(the code is in dtrace and runs on the server side, see tcp.d for the code)

There is a lot of activity in this simple request for 8K. What is all the communication? Frankly at this point, I’m not sure. I haven’t looked at the contents of the packets but I’d guess some of it has to do with getting file attributes. Maybe we’ll go into those details in future postings.

For now what I’m interested in is throughput and latency and for the latency, figuring out where the time is being spent.

I most interested in latency. I’m interested in what happens to a query’s response time when it reads I/O off of NFS as opposed to the same disks without NFS. Most NFS blogs seem to address throughput.

Before we jump into the actually network stack and OS operations latencies, let’s look at the physics of the data transfer.

If we are on a 1Ge we can do up toin theory 122MB/s but actually the maximum is more like 115 MB/s and for most systems 100 MB/s is good) , thus

100 MB/s

100 KB/ms

1 KB per 0.01ms (ie 10us)

15us ( 0.015 ms) to transfer a 1500 byte network packet (ie MTU or maximum transfer unit)

a 1500 byte transfer has IP framing and only transfers 1448 bytes of actual data

so an 8K block from Oracle will take 5.7 packets which rounds off to 6 packets

Each packet takes 15us, so 6 packets for 8K takes 90us (interesting to note this part of the transfer goes down to 9us on 10Ge – if all worked perfectly )

Now a well tuned 8K transfer takes about 350us (from testing, more on this later) , so where is the other ~ 260 us come from? (260us+90us transfer time = 350us total latency)

Well if I look at the above diagram, the total transfer time takes 4776 us ( or 4.7ms) from start to finish, but this transfer does a lot of set up.

The actual 8K transfer (5 x 1448 byte packets plus the 1088 byte packet ) takes 780 us or about twice as long as optimal.

Comparing good throughput to bad throughput

I don’t have an answer to the following TCP performance difference between two machines, but thought I’d post about what I’ve seen as I think it’s interesting, and maybe someone else has ideas.

I’m running netio (http://freshmeat.net/projects/netio/) on one machine (opensolaris) and contacting two different Linux machines (both on 2.6.18-128.el5 ), machine A and machine B.

Machine A has a network throughput of 10MB/sec with netio and machine B 100MB/sec with netio. Netio is set to send 32K chunks:

Linux machine: netio -s -b 32k -t -p 1234

Opensolaris: netio -b 32k -t -p 1234 linuxmachine

On the opensolaris machine I used dtrace to trace the connections. From dtrace, all the interactions TCP settings look the same – same windows sizes on the receive and send, same ssthresh, same congestion window sizes, but the slow machine is sending an ACK for every 2 or 3 receives whereas the fast machine is sending an ACK every 12 receives.

All three machines are on the same switch.

Here is the Dtrace output:

Fast Machine:

delta send recd (us) bytes bytes swnd snd_ws rwnd rcv_ws cwnd ssthresh 122 1448 195200 7 131768 2 128872 1073725440 37 1448 195200 7 131768 2 128872 1073725440 20 1448 195200 7 131768 2 128872 1073725440 18 1448 195200 7 131768 2 128872 1073725440 18 1448 195200 7 131768 2 128872 1073725440 18 1448 195200 7 131768 2 128872 1073725440 18 1448 195200 7 131768 2 128872 1073725440 19 1448 195200 7 131768 2 128872 1073725440 18 1448 195200 7 131768 2 128872 1073725440 18 1448 195200 7 131768 2 128872 1073725440 57 1448 195200 7 131768 2 128872 1073725440 171 1448 195200 7 131768 2 128872 1073725440 29 912 195200 7 131768 2 128872 1073725440 30 / 0 195200 7 131768 2 128872 1073725440

slow machine:

delta send recd (us) bytes bytes swnd snd_ws rwnd rcv_ws cwnd ssthresh 161 / 0 195200 7 131768 2 127424 1073725440 52 1448 195200 7 131768 2 128872 1073725440 33 1448 195200 7 131768 2 128872 1073725440 11 1448 195200 7 131768 2 128872 1073725440 143 / 0 195200 7 131768 2 128872 1073725440 46 1448 195200 7 131768 2 130320 1073725440 31 1448 195200 7 131768 2 130320 1073725440 11 1448 195200 7 131768 2 130320 1073725440 157 / 0 195200 7 131768 2 130320 1073725440 46 1448 195200 7 131768 2 131768 1073725440 18 1448 195200 7 131768 2 131768 1073725440

Dtrace code

dtrace: 130717 drops on CPU 0

#!/usr/sbin/dtrace -s

#pragma D option quiet

#pragma D option defaultargs

inline int TICKS=$1;

inline string ADDR=$$2;

dtrace:::BEGIN

{

TIMER = ( TICKS != NULL ) ? TICKS : 1 ;

ticks = TIMER;

TITLE = 10;

title = 0;

walltime=timestamp;

printf("starting up ...n");

}

tcp:::send

/ ( args[2]->ip_daddr == ADDR || ADDR == NULL ) /

{

nfs[args[1]->cs_cid]=1; /* this is an NFS thread */

delta= timestamp-walltime;

walltime=timestamp;

printf("%6d %8d %8s %8d %8d %8d %8d %8d %12d %12d %12d %8d %8d %d n",

delta/1000,

args[2]->ip_plength - args[4]->tcp_offset,

"",

args[3]->tcps_swnd,

args[3]->tcps_snd_ws,

args[3]->tcps_rwnd,

args[3]->tcps_rcv_ws,

args[3]->tcps_cwnd,

args[3]->tcps_cwnd_ssthresh,

args[3]->tcps_sack_fack,

args[3]->tcps_sack_snxt,

args[3]->tcps_rto,

args[3]->tcps_mss,

args[3]->tcps_retransmit

);

flag=0;

title--;

}

tcp:::receive

/ ( args[2]->ip_saddr == ADDR || ADDR == NULL ) && nfs[args[1]->cs_cid] /

{

delta=timestamp-walltime;

walltime=timestamp;

printf("%6d %8s / %8d %8d %8d %8d %8d %8d %12d %12d %12d %8d %8d %d n",

delta/1000,

"",

args[2]->ip_plength - args[4]->tcp_offset,

args[3]->tcps_swnd,

args[3]->tcps_snd_ws,

args[3]->tcps_rwnd,

args[3]->tcps_rcv_ws,

args[3]->tcps_cwnd,

args[3]->tcps_cwnd_ssthresh,

args[3]->tcps_sack_fack,

args[3]->tcps_sack_snxt,

args[3]->tcps_rto,

args[3]->tcps_mss,

args[3]->tcps_retransmit

);

flag=0;

title--;

}

Followup , since I did the above, I have since changed the dtrace code to include the number of unacknowledged bytes and it turns out the slow code does run up it’s unacknowleged bytes until it hits the congestion window, where as the fast machine never hits it’s congestion window:

unack unack delta bytes bytes send receive cong ssthresh bytes byte us sent recieved window window window sent recieved 139760 0 31 1448 195200 131768 144800 1073725440 139760 0 33 1448 195200 131768 144800 1073725440 144104 0 29 1448 195200 131768 146248 1073725440 145552 0 31 / 0 195200 131768 144800 1073725440 145552 0 41 1448 195200 131768 147696 1073725440 147000 0 30 / 0 195200 131768 144800 1073725440 147000 0 22 1448 195200 131768 76744 72400 147000 0 28 / 0 195200 131768 76744 72400 147000 0 18 1448 195200 131768 76744 72400 147000 0 26 / 0 195200 131768 76744 72400 147000 0 17 1448 195200 131768 76744 72400 147000 0 27 / 0 195200 131768 76744 72400 147000 0 18 1448 195200 131768 76744 72400 147000 0 56 / 0 195200 131768 76744 72400 147000 0 22 1448 195200 131768 76744 72400

dtrace code:

#!/usr/sbin/dtrace -s

#pragma D option quiet

#pragma D option defaultargs

inline int TICKS=$1;

inline string ADDR=$$2;

tcp:::send, tcp:::receive

/ ( args[2]->ip_daddr == ADDR || ADDR == NULL ) /

{

nfs[args[1]->cs_cid]=1; /* this is an NFS thread */

delta= timestamp-walltime;

walltime=timestamp;

printf("%6d %6d %6d %8d %8s %8d %8d %8d %8d %8d %12d %12d %12d %8d %8d %d n",

args[3]->tcps_snxt - args[3]->tcps_suna ,

args[3]->tcps_rnxt - args[3]->tcps_rack,

delta/1000,

args[2]->ip_plength - args[4]->tcp_offset,

"",

args[3]->tcps_swnd,

args[3]->tcps_snd_ws,

args[3]->tcps_rwnd,

args[3]->tcps_rcv_ws,

args[3]->tcps_cwnd,

args[3]->tcps_cwnd_ssthresh,

args[3]->tcps_sack_fack,

args[3]->tcps_sack_snxt,

args[3]->tcps_rto,

args[3]->tcps_mss,

args[3]->tcps_retransmit

);

}

tcp:::receive

/ ( args[2]->ip_saddr == ADDR || ADDR == NULL ) && nfs[args[1]->cs_cid] /

{

delta=timestamp-walltime;

walltime=timestamp;

printf("%6d %6d %6d %8s / %-8d %8d %8d %8d %8d %8d %12d %12d %12d %8d %8d %d n",

args[3]->tcps_snxt - args[3]->tcps_suna ,

args[3]->tcps_rnxt - args[3]->tcps_rack,

delta/1000,

"",

args[2]->ip_plength - args[4]->tcp_offset,

args[3]->tcps_swnd,

args[3]->tcps_snd_ws,

args[3]->tcps_rwnd,

args[3]->tcps_rcv_ws,

args[3]->tcps_cwnd,

args[3]->tcps_cwnd_ssthresh,

args[3]->tcps_sack_fack,

args[3]->tcps_sack_snxt,

args[3]->tcps_rto,

args[3]->tcps_mss,

args[3]->tcps_retransmit

);

}

So the fact that it looked like the slow machine was acknowledging every second or third send was due to the fact that the receiver was already behind on acknowledging previous packets.

Now the question remains is why did the receiver get so far behind on one machine and not the other.

I’ve check the rmem values on both machines and set the to the same:

I showed some code that displayed the send and receive sizes and times over TCP on Solaris 10 with the dtrace command (see tcp.d for the code). I took this code on another machine and got errors like

“dtrace: error on enabled probe ID 29 (ID 5461: tcp:ip:tcp_input_data:receive): invalid alignment (0xffffff516eb5e83a) in predicate at DIF offset 136″

Not quite sure why this was happening but by a process of elimination I found that accessing args[4] in tcp:::receive caused these errors.

Fortunately many of the values in args[4] are found in args[3] as well.

To find arguments to tcp:::receive , first run the following command (or alternatively look the TCP probes up on the wiki athttp://wikis.sun.com/display/DTrace/tcp+Provider)

( for command line flags check out http://compute.cnr.berkeley.edu/cgi-bin/man-cgi?dtrace+7

- -l = list instead of enable probes

- -n = Specify probe name to trace or list

- -v = Set verbose mode

i.e. list the verbose information about the probes named and don’t enable these probes, just list them)

$ dtrace -lvn tcp:::receive

5473 tcp ip tcp_output send

Argument Types

args[0]: pktinfo_t *

args[1]: csinfo_t *

args[2]: ipinfo_t *

args[3]: tcpsinfo_t *

args[4]: tcpinfo_t *

(by the way, there are a number of probes that match tcp:::receive, but they all have the same arguments, I didn’t notice all these different tcp:::receive until I actually ran the above command. Before running the command I’d depended on the wiki. Now I’m wondering what the difference is between some of these tcp:::receive and tcp:::send probes )

After finding the args for a probe, you can look up the definition of the structs at http://cvs.opensolaris.org/source/

tcp:::send and tcp:::receive both have the same arguments

- args[3] is tcpsinfo_t

- args[4] is tcpinfo_t

Looking up the structs at http://cvs.opensolaris.org/source/, I find the contents of the structs as follows:

tcpsinfo_t ( args[3] )

111 typedef struct tcpsinfo {

112 uintptr_t tcps_addr;

113 int tcps_local; /* is delivered locally, boolean */

114 int tcps_active; /* active open (from here), boolean */

115 uint16_t tcps_lport; /* local port */

116 uint16_t tcps_rport; /* remote port */

117 string tcps_laddr; /* local address, as a string */

118 string tcps_raddr; /* remote address, as a string */

119 int32_t tcps_state; /* TCP state */

120 uint32_t tcps_iss; /* Initial sequence # sent */

121 uint32_t tcps_suna; /* sequence # sent but unacked */

122 uint32_t tcps_snxt; /* next sequence # to send */

123 uint32_t tcps_rack; /* sequence # we have acked */

124 uint32_t tcps_rnxt; /* next sequence # expected */

125 uint32_t tcps_swnd; /* send window size */

126 int32_t tcps_snd_ws; /* send window scaling */

127 uint32_t tcps_rwnd; /* receive window size */

128 int32_t tcps_rcv_ws; /* receive window scaling */

129 uint32_t tcps_cwnd; /* congestion window */

130 uint32_t tcps_cwnd_ssthresh; /* threshold for congestion avoidance */

131 uint32_t tcps_sack_fack; /* SACK sequence # we have acked */

132 uint32_t tcps_sack_snxt; /* next SACK seq # for retransmission */

133 uint32_t tcps_rto; /* round-trip timeout, msec */

134 uint32_t tcps_mss; /* max segment size */

135 int tcps_retransmit; /* retransmit send event, boolean */

tcpinfo_t (args[4])

95 typedef struct tcpinfo {

96 uint16_t tcp_sport; /* source port */

97 uint16_t tcp_dport; /* destination port */

98 uint32_t tcp_seq; /* sequence number */

99 uint32_t tcp_ack; /* acknowledgment number */

100 uint8_t tcp_offset; /* data offset, in bytes */

101 uint8_t tcp_flags; /* flags */

102 uint16_t tcp_window; /* window size */

103 uint16_t tcp_checksum; /* checksum */

104 uint16_t tcp_urgent; /* urgent data pointer */

105 tcph_t *tcp_hdr; /* raw TCP header */

106 } tcpinfo_t;

In the script I accessed the TCP seq and ack in arg[4], which was giving me errors in tcp:::receive, so I just switch these for the equivalents in arg[3]. Now I’m not exactly clear on the equivalence, but it seems

- args[4]->tcp_seq ?= args[3]->tcps_suna

- args[4]->tcp_ack ?= args[3]->tcps_rack

The ack=rack seems solid as tcps_rack = “highest sequence number for which we have received and sent an acknowledgement”.

The seq=tcps_suna is less clear to me as tcps_suna = “lowest sequence number for which we have sent data but not received acknowledgement”

But for my purposes, these distinctions might be unimportant as I’ve stopped looking at seq and ack and now look at outstanding unacknowledge bytes on the receiver and sender, which is

- tcps_snxt-tcps_suna ” gives the number of bytes pending acknowledgement “

- tcps_rsnxt – tcps_rack ” gives the number of bytes we have received but not yet acknowledged”

but more about that later. Let’s look at the new version of the program and the output

#!/usr/sbin/dtrace -s

#pragma D option defaultargs

#pragma D option quiet

inline string ADDR=$$1;

dtrace:::BEGIN

{ TITLE = 10;

title = 0;

walltime=timestamp;

printf("starting up ...n");

}

tcp:::send, tcp:::receive

/ title == 0 /

{ printf("%9s %8s %6s %8s %8s %12s %s n",

"delta" ,

"cid" ,

"pid" ,

"send" ,

"recd" ,

"seq",

"ack"

);

title=TITLE;

}

tcp:::send

/ ( args[3]->tcps_raddr == ADDR || ADDR == NULL ) /

{ nfs[args[1]->cs_cid]=1; /* this is an NFS thread */

delta= timestamp-walltime;

walltime=timestamp;

printf("%9d %8d %6d %8d --> %8s %8d %8d %12s > %s n",

delta/1000,

args[1]->cs_cid % 100000,

args[1]->cs_pid ,

args[2]->ip_plength - args[4]->tcp_offset,

"",

args[4]->tcp_seq -

args[3]->tcps_suna ,

args[4]->tcp_ack -

args[3]->tcps_rack ,

args[3]->tcps_raddr,

curpsinfo->pr_psargs

);

title--;

}

tcp:::receive

/ ( args[3]->tcps_raddr == ADDR || ADDR == NULL ) && nfs[args[1]->cs_cid] /

{ delta=timestamp-walltime;

walltime=timestamp;

printf("%9d %8d %6d %8s <-- %-8d %8d %8d %12s < %s n",

delta/1000,

args[1]->cs_cid % 100000,

args[1]->cs_pid ,

"",

args[2]->ip_plength - args[4]->tcp_offset,

args[3]->tcps_rack % 10000,

args[3]->tcps_suna % 10000,

args[3]->tcps_raddr,

curpsinfo->pr_psargs

);

title--;

}

output

starting up ...

delta cid pid send recd

570 3904 845 <-- 0

34 3904 845 <-- 140

455 3904 845 <-- 0

24 3904 845 <-- 0

4789720 3904 845 124 -->

82 3904 845 244 -->

99 3904 845 132 -->

delta cid pid send recd

52 3904 845 1448 -->

28 3904 845 1448 -->

24 3904 845 1448 -->

36 3904 845 1448 -->

33 3904 845 1448 -->

26 3904 845 952 -->

86 3904 845 244 -->

212 3904 845 <-- 140

501 3904 845 <-- 132

60 3904 845 124 -->

256 3904 845 <-- 140

72 3904 845 <-- 0

39658 3904 845 <-- 0

What the heck is that huge time 4789720 us? ie 4 secs? The whole operation took me less than 1 second.

I wouldn’t have found the answer to this without help from Adam Levanthal. Turns out that output is in order only per CPU, but different CPUs can output data in different order. Each CPU buffers up data and then passed the buffer back to userland dtrace, so on a one CPU machine, this code will always output in order, but on a multi-cpu machine there is no guarentee on the order of the output. Lets add CPU # to the output:

#!/usr/sbin/dtrace -s

#pragma D option defaultargs

#pragma D option quiet

inline string ADDR=$$1;

dtrace:::BEGIN

{ TITLE = 10;

title = 0;

walltime=timestamp;

printf("starting up ...n");

}

tcp:::send, tcp:::receive

/ title == 0 /

{ printf("%9s %8s %6s %8s %8s %4s n",

"delta" ,

"cid" ,

"pid" ,

"send" ,

"recd" ,

"cpu#"

);

title=TITLE;

}

tcp:::send

/ ( args[3]->tcps_raddr == ADDR || ADDR == NULL ) /

{ nfs[args[1]->cs_cid]=1; /* this is an NFS thread */

delta= timestamp-walltime;

walltime=timestamp;

printf("%9d %8d %6d %8d --> %8s %d n",

delta/1000,

args[1]->cs_cid % 100000,

args[1]->cs_pid ,

args[2]->ip_plength - args[4]->tcp_offset,

"",

cpu

);

title--;

}

tcp:::receive

/ ( args[3]->tcps_raddr == ADDR || ADDR == NULL ) && nfs[args[1]->cs_cid] /

{ delta=timestamp-walltime;

walltime=timestamp;

printf("%9d %8d %6d %8s <-- %-8d %d n",

delta/1000,

args[1]->cs_cid % 100000,

args[1]->cs_pid ,

"",

args[2]->ip_plength - args[4]->tcp_offset,

cpu

);

title--;

}

output

delta cid pid send recd cpu#

42 3904 845 244 --> 0

66 3904 845 124 --> 0

6091 3904 845 124 --> 2

81 3904 845 244 --> 2

96 3904 845 132 --> 2

31 3904 845 1448 --> 2

20 3904 845 1448 --> 2

18 3904 845 1448 --> 2

17 3904 845 1448 --> 2

16 3904 845 1448 --> 2

8910406 3904 845 0 --> 3

375 3904 845 <-- 0 3

24 3904 845 <-- 140 3

34 3904 845 0 --> 3

470 3904 845 <-- 140 3

410 3904 845 <-- 132 3

delta cid pid send recd cpu#

491 3904 845 <-- 140 3

393 3904 845 <-- 0 3

15 3904 845 952 --> 3

36 3904 845 <-- 0 3

delta cid pid send recd cpu#

19 3904 845 <-- 0 3

40167 3904 845 <-- 0 3

what we see is the data ordered by CPU. In other words for each CPU the data is ordered but which CPU get’s printed first is unknown. In dtrace each CPU buffers up it’s data and then sends it to the userland dtrace process.

The only “fix” for now is to add a timestamp and order the data by timestamp.

Unsorted, it looks like:

607858102997348 281 3904 845 124 --> 2 607858103608856 84 3904 845 244 --> 2 607858104125414 delta cid pid send recd cpu# 607858104143731 116 3904 845 132 --> 2 607858104176769 33 3904 845 1448 --> 2 607858104198187 21 3904 845 1448 --> 2 607858104215813 17 3904 845 1448 --> 2 607858104233004 17 3904 845 1448 --> 2 607858104267629 34 3904 845 1448 --> 2 607858104287379 19 3904 845 952 --> 2 607858102716187 11569935 3904 845 <-- 132 3 607858103245377 248 3904 845 <-- 0 3 607858103282421 37 3904 845 <-- 140 3 607858103339076 56 3904 845 244 --> 3 607858103524093 185 3904 845 <-- 140 3 607858103774417 165 3904 845 <-- 132 3 607858103823145 48 3904 845 124 --> 3 607858104027216 204 3904 845 <-- 140 3 607858104387780 100 3904 845 <-- 0 3 607858104401487 13 3904 845 <-- 0 3 607858104520815 119 3904 845 <-- 0 3 607858144436175 delta cid pid send recd cpu# 607858144454625 39933 3904 845 <-- 0 3

sorted it looks like

607858102716187 11569935 3904 845 <-- 132 3 607858102997348 281 3904 845 124 --> 2 607858103245377 248 3904 845 <-- 0 3 607858103282421 37 3904 845 <-- 140 3 607858103339076 56 3904 845 244 --> 3 607858103524093 185 3904 845 <-- 140 3 607858103608856 84 3904 845 244 --> 2 607858103774417 165 3904 845 <-- 132 3 607858103823145 48 3904 845 124 --> 3 607858104027216 204 3904 845 <-- 140 3 607858104125414 delta cid pid send recd cpu# 607858104143731 116 3904 845 132 --> 2 607858104176769 33 3904 845 1448 --> 2 607858104198187 21 3904 845 1448 --> 2 607858104215813 17 3904 845 1448 --> 2 607858104233004 17 3904 845 1448 --> 2 607858104267629 34 3904 845 1448 --> 2 607858104287379 19 3904 845 952 --> 2 607858104387780 100 3904 845 <-- 0 3 607858104401487 13 3904 845 <-- 0 3 607858104520815 119 3904 845 <-- 0 3 607858144436175 delta cid pid send recd cpu# 607858144454625 39933 3904 845 <-- 0 3

so now the strange long time delta is at the beginning where I’d expect it.

I’m not quite sure how to deal with this. Post processing the data by sorting the timestamp column works, but interactively processing the data to get it in the right order as it comes out seems problematic.

Jumbo Frames

Jumbo frames can have a significant impact in latency, though jumbo frames are often difficult. Implementing jumbo frames on a machine can cause the machine network communications to hang if a machine or switch on that connection doesn’t support jumbo frames. Modifying switches to support jumbo frames generally requires taking the switch offline which may not be feasible.

Below is a comparison between standard MTU of 1500 bytes and jumbo frame MTU of 9000. IN n this case the jumbo frame connection is twice as fast.

I think using throughput divided by the blocksize as a base target for measure of latency is fraught with danger.

Throughput is measured simply as how fast a certain amount of data goes from A to B. It omits all the other “niceties” of TCP/IP, of which the MTU is one of the most relevant.

When we are dealing with 1KB/10us potential timeframes, things like the speed at which the actual controller hardware can acknowledge and follow-up each and every one of the MTU blocks becomes very relevant. 10us is right up there with CPU cycle speed in network card controllers (no, they are not intel core i7s…)and it takes quite a few of those cycles to process each MTU before attending to the next.

The influence of largeframe (larger MTU) networking configs cannot be overstated here. If the purpose is to transfer large amounts of data, then a larger frame makes for less interference of MTU process time within overall time. Whereas in pure latency terms (fastest possible response to small amount of transmitted data) a standard MTU is far more desirable. Conflicting requirements!

Let’s not forget that the whole purpose of the choice of a 1400 MTU as the default size harks back to a time in the IT past when block sizes of 512 bytes were “huge”!

Far outscaled nowadays, but seldom if ever changed. More or less the same inferences here as the modern trend to disk sector block sizes of 4K instead of 512 bytes – a size that is a leftover from the very first days of SCSI technology and totally out of proportion to current data and disk sizes. Also the same reasoning behind using hugepages to avoid TLA thrashing for virtual memory paging.

Thanks for a very thought-provoking post, much appreciated.

Hi Noons,

Thanks for stopping by. Yes, jumbo frames can make a big difference. After you posted your comment I added an example of with and without jumbo frames to show how the communication changes and how much it impacted latency. In the particular case jumbo frames was twice as fast.

Indeed. Thanks for the update. However, a word of caution with jumbo frames.

I unfortunately got hit by this last year when we were setting our db servers into separate vlans, with firewalls in-between.

Not all routers, bridges and firewalls can operate properly when jumbo frames are used. A lot have diminished functionality. And things like CNAMEs and such become notoriously harder to get right.

Networking is still in the embryo stages with flexible frame sizing and there are a lot of “hidden features” (if you catch my drift) when one steps outside plain vanilla MTU setups.

Far from saying “do not use them”, though. Simply cautioning that it may cause unexpected sidetracks.