It’s not uncommon for different recommended practices to arise in technical sizing and optimization practices. For many, it’s a compromise between most optimal data and ease of access vs. impact on production environments, which is no different from what we face when sizing Oracle on Azure.

As we know it’s important to lift and shift the workload vs. moving the hardware, we must have as simple way to perform this task. The question comes up repeatedly as to our preferred method of working with a one-week Automatic Workload Repository, (AWR) report to do so. This post will be focused on my opportunity of a small test on how different windows of AWR data collection impacted our recommended architecture and sizing in Azure for Oracle.

The examples used are from real customers, with the database and host information masked to protect the customer’s environments. These customers provided me with both hourly, peak and/or one-week window reports for their AWR workloads.

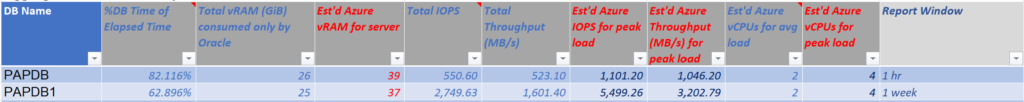

Example 1

Our First example is a RAC database, single node with two submissions- a one-hour AWR and a one-week report from the customer. What you will note is with the one-hour report, the sizing for the host memory and CPU is quite close for both in calculations. Our challenge is that with Oracle on Azure, it’s always about the IOPs in most cases:

In a one-hour report, multiple reports will need to be gathered since we’re more likely to miss:

- What was the peak workload?

- What night-time batch processing occurs?

- What demands do backups put on the database?

- What are the top performance hits over time?

- What long-running processes occur and the calculations from those that run over the one-hour report?

As stated earlier, IOPS is one of the highest concerns with an Oracle workloads on Azure. We have considerable vCPU and memory we can allocate, but then storage needs to be carefully designed to meet the needs of these IO hungry databases. As you can see by our first example, the IOPS doubled between the one-hour report and the one-week report. Also notice there are different values for what is calculated from the values for the average, which are the direct values from AWR and what we calculate for the peak workload. These values are derived with the understanding that these are averages and that there may be workloads that we could be missing in the one-week report and we grant some “wiggle room” in our calculations. This is where the “Peak” values come in and we size for this, never the averages displayed in the worksheet.

These numbers use a separate table that is configurable by workload. A workload that is heavier in memory, CPU or IOPS can be adjusted to grant more leverage to the output, a decision based on the Oracle specialist stating that we expect more workload in a given area.

The sizing for the above example database would fall to a few VM solutions:

- 4 vCPU

- 39 GiB memory

There are significant VMs that have 4 vCPU and 32 GiB in memory. I would then advise my customer which one of the E-series with an “S” designation, (more flexibility in managed disk if we need to stripe more disk for redo, archive logs, etc.) with 32 GiB of memory would be best for their database.

For storage, our calculations state we require:

- 5K IOPS and 3.2K MBPs.

There are only a few managed disk solutions that can provide this. It’s not an exact value and it doesn’t need to be. If you look at the table for storage, you’ll quickly see that this corresponds to only a few solutions for this type of heavy IO workload. Even Ultra Disk is going to be pushed passed its limit:

| Capacity per disk (GiB) | IOPS per disk | Throughput per disk (MB/s) | |

| Mininum | 4 | 100 | 1 |

| Maximum | 65536 | 160000 | 2000 |

This means we automatically bring in a solution that isn’t bound by the individual VM or limits of the managed disk, which for Azure, means Azure NetApp Files, (ANF). Would we have known to bring in this option if we’d worked with the 1-hour AWR report? No- we may have very well recommended to go with premium or ultra disk, missing the fact that the workload will end up demanding an architecture with less restrictions on IO.

This is not to say the customer must go with the recommendations. They could also take up a strong optimization exercise and eliminate the heavy IO on the database and be able to decrease the resource needs in the cloud. As an optimization specialist, I’m never going to turn down this option from anyone towards their long-term satisfaction in the cloud. It’s always about efficient use of your resources, but the first course is to lift and shift the workload as is, which means that is what I need to architect for.

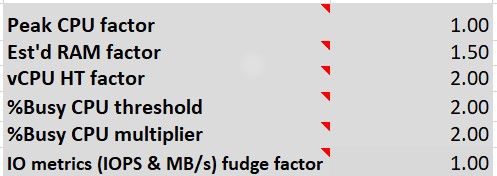

Example 2

This second example is from an average single instance database to be migrated to Azure.

In my workloads, I’ve numbered them to make them unique in the output and there were three that were submitted to Microsoft:

- A 1-hour workload

- A 1-hour peak workload

- A 1-week workload report

Workload can also be a perception thing for those who manage and monitor a database. I’m all about the data for a reason- How often have you met with IT teams and they are sure that a certain time is the busiest time for their application or database and if you turn on activity reporting, a very different picture results. The peak load resulted in less vCPU than the one-week report and the IO ended up mixed- greater MBPs, but less IOPs in the 1-hour peak. When we take the wiggle room into consideration, we can see that we more than cover the peak workload of 9126.86 MBPs, in our one-week peak MBPs of 17156.98 MPBs.

In other words, I would have captured the peak workload in my “wiggle room” that I used as part of my one-week report and again, have satisfied the sizing exercise and sized the Oracle workload for Azure correctly.

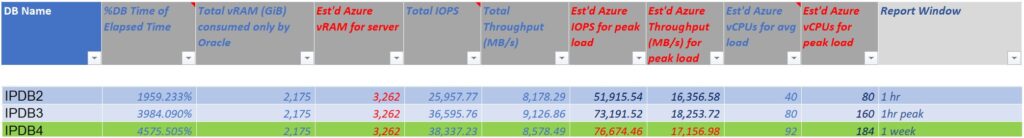

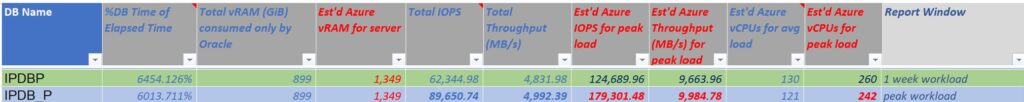

Example 3

The third example is from an Exadata- another large example, but an interesting one. This customer wanted to see the difference between running a unique, peak workload they had run one-time, (which they had an AWR for) and their normal weekly workload and it produced quite mixed results:

As you can see from the above output, the one-week output produced far less in IO but was significantly higher in vCPU requirements. This is where we realize that an identified peak can be peak by one resource and not another. Its about the pain point, rarely across all resources.

In this case, the customer was again requiring significant IOPS and optimization would be required to meet their needs. They were already faced with this from other cloud vendors and that we produced the data for a baseline, along with optimization recommendations were the reason they decided to go with Azure. The plan here is to optimize onprem and then re-evaluate the workload, but again, the important reason to bring up this example is that a peak workload may not provide you with the results that you hoped for.

Again, we look at our workload averages and compare them to the one week workload peak numbers, we more than cover for them, which is the goal with the peak numbers.

And the Consensus Is

Have we lost anything by making the customer run one, one-week report vs. running multiple reports to gather various peaks and/or running queries to collect data on peak workloads?

The answer in 98% of cases is No.

Do we decrease the need for manual human calculations of different workloads to come up with exact numbers for workloads with the one-week method of AWR sizing? Do we decrease the chance for human error by simplifying to a single workload report as well?

Yes and Yes.

Are we using these numbers to size out the storage requirements for Azure NetApp Files, (ANF) or other advanced Azure services or products?

No. Those calculations are done with ANF’s own scripts and are understood by the customer to require a bit more investigation to ensure they get the best performance from the service. Our calculations only tell us that we will need the service to meet the IO needs of the customer’s workload, which is accurate.

The one-week report offers the most optimal and simple sizing solution for estimating workloads for Azure without requiring extensive effort from the customer’s resources, without putting extra pressure on the customer’s databases and simplifies the ability to size out the workload for Azure for those that may not be as familiar with Oracle as some of us.