I was asked today to start blogging on Oracle Real Application Clusters (RAC), so here we go, down the rabbit hole! I fully admit to having a love/hate relationship with RAC. I love the redundancy, scalability, and instance resiliency that RAC provides, but many of its unnecessary complexities and overhead frustrate me. I regularly come up against traditional OLTP workloads that scream out for RAC to reach the scale they require day to day, but I also know there’s a ceiling RAC can’t solve. At some point, the database design, code, and application benefit most from a redesign. Those of us who are optimization DBAs see this as concurrency that no amount of additional scaling can relieve: waits climb around concurrency and on enq: TX - row lock contention (I’ll walk through a simplified example of this later in this post).

Oracle Real Application Clusters (RAC) is still one of the most robust instance-level high-availability and scalability solutions, designed to provide resilience, performance, and continuous service for many Oracle enterprise workloads. Whether deployed on two nodes or in a complex multi-node setup, RAC keeps your database infrastructure both fault-tolerant and responsive under increasing demand. RAC is an essential part of Oracle’s Maximum Availability Architecture (MAA) recommended practices and, in my experience, is found in about 40% of small to medium Oracle environments, 98% of large enterprise environments, and 100% of Exadata engineered systems.

In this post, we’ll walk through the architectural foundation of RAC, configuration essentials, and a real-world transactional scenario that highlights the importance of its shared and synchronized architecture.

Oracle RAC Architecture Overview

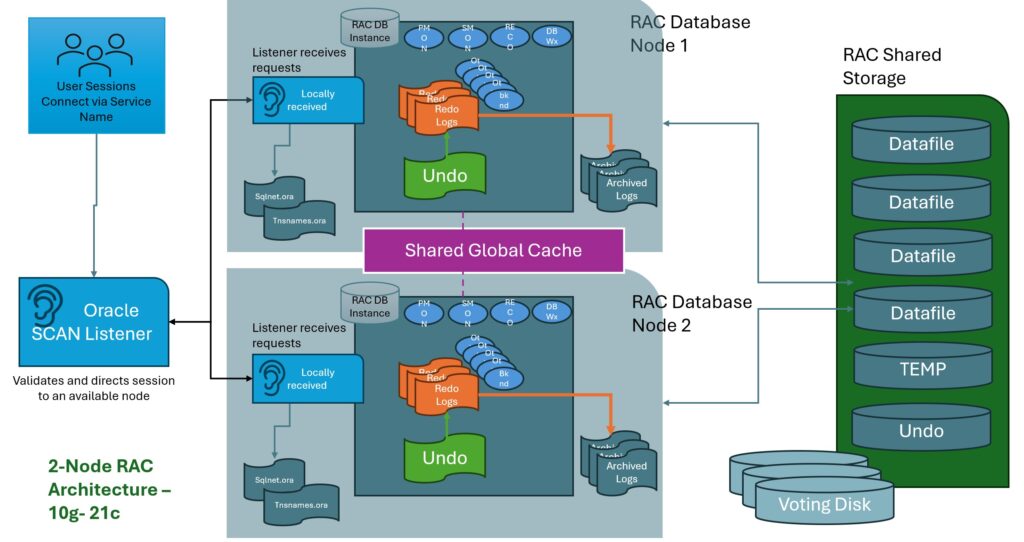

At its core, Oracle RAC allows multiple Oracle instances (each running on a separate server node) to access a single shared database. This setup enables both load balancing and high availability at the instance level. I will stress that in regional cloud environments, Data Guard is still required to meet many HA requirements that RAC can’t stretch to cover. Simplified, RAC comes in two configurations:

- Multi-Node RAC: Multiple servers (nodes), each running an Oracle instance, accessing shared database files.

- RAC One Node: A special configuration in which a single database instance runs on one node but can fail over to a second node in case of a fault. It’s ideal for active/passive configurations or when licensing and resource costs are a concern. Shared storage is still required here.

Configuration Essentials

Clusterware and Grid Infrastructure

Oracle RAC requires Oracle Grid Infrastructure, which includes:

- Oracle Clusterware: Provides node membership, cluster management, and high availability features.

- Oracle ASM (Automatic Storage Management): Manages shared storage used by all instances in the RAC cluster.

Clusterware manages the entire cluster stack and includes key components:

- Voting Disks: Used to maintain cluster quorum and node membership.

- OCR (Oracle Cluster Registry): Stores cluster configuration information.

A healthy cluster depends on:

- A stable private interconnect between nodes.

- Redundant voting disks and OCR.

- Shared storage for the database and ASM.
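One way to check the redundancy items above from the storage side is a query on an ASM instance. This is a sketch, assuming Grid Infrastructure 11.2 or later (where voting files can be stored in ASM and V$ASM_DISK exposes a VOTING_FILE column) and a SYSASM connection. OCR placement is checked with Clusterware tools such as ocrcheck rather than with SQL.

```sql
-- Run on an ASM instance as SYSASM (Grid Infrastructure 11.2 or later assumed).
-- Shows each mounted disk group's redundancy type and which disks carry voting files.
SELECT dg.name          AS diskgroup,
       dg.type          AS redundancy,   -- EXTERN, NORMAL or HIGH (newer releases add others)
       dg.offline_disks,
       d.failgroup,
       d.path,
       d.mode_status,                    -- ONLINE / OFFLINE
       d.voting_file                     -- 'Y' if this disk holds a voting file
FROM   v$asm_diskgroup dg
JOIN   v$asm_disk      d
       ON d.group_number = dg.group_number
ORDER  BY dg.name, d.failgroup, d.path;
```

With a NORMAL redundancy disk group you’d expect voting files on three disks in separate failure groups (five with HIGH redundancy); any disk reported as OFFLINE is worth resolving before going further.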

When I was first trained on RAC, I was always advised: “Until your cluster is stable and healthy, take no further steps.” I’ve found this to be solid advice to this day. The foundation for RAC must be in place before you build the additional complexity it needs to perform successfully.

Shared Storage

RAC relies on shared storage that must be accessible to all nodes. This typically includes:

- ASM diskgroups for datafiles, control files, redo logs, and archive logs.

- Tier 1 storage performance and redundancy are essential: because all nodes share the storage, poor-quality storage impacts the entire cluster.
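Because every node reads and writes the same shared storage, one simple sanity check is to compare I/O wait times across instances. This is a minimal sketch, assuming a DBA account with access to the GV$ views; the event list is illustrative, not exhaustive.

```sql
-- Average storage-related wait times per RAC instance, cumulative since startup.
-- If every instance shows elevated latency, suspect the shared storage layer
-- rather than a single node.
SELECT inst_id,
       event,
       total_waits,
       ROUND(time_waited_micro / NULLIF(total_waits, 0) / 1000, 2) AS avg_wait_ms
FROM   gv$system_event
WHERE  event IN ('db file sequential read',
                 'db file scattered read',
                 'log file parallel write')
ORDER  BY event, inst_id;
```

Since these values accumulate from each instance’s startup, they smooth over short spikes; AWR or ASH data is the better tool for a specific time window.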

Global Database & Instance Configuration

Each RAC instance runs on a separate node but opens the same database files. Clients reach these instances through:

- SCAN (Single Client Access Name): A cluster-wide listener configuration allowing clients to connect to any node transparently.

- VIPs (Virtual IPs): Assigned per node for client failover. If a node fails, the VIP fails over to a surviving node.

- DNS resolution: The SCAN name is resolved through DNS to its virtual IP addresses (Oracle recommends three).

- Location transparency: Because clients connect to a service through the SCAN listener rather than to a specific host, nodes can be added, removed, or fail without changing client connection strings.
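Connecting through the SCAN more than once can land you on different instances. The sketch below shows where the current session ended up and how each instance registers with the listeners. The `scan-name:1521` value in the comment is a placeholder, not a real host name.

```sql
-- Which instance, host and service did this connection land on?
SELECT SYS_CONTEXT('USERENV', 'INSTANCE_NAME') AS instance_name,
       SYS_CONTEXT('USERENV', 'SERVER_HOST')   AS server_host,
       SYS_CONTEXT('USERENV', 'SERVICE_NAME')  AS service_name
FROM   dual;

-- How each instance registers with listeners: REMOTE_LISTENER typically
-- points at the SCAN (e.g. scan-name:1521), LOCAL_LISTENER at the node VIP.
SELECT inst_id, name, value
FROM   gv$parameter
WHERE  name IN ('local_listener', 'remote_listener')
ORDER  BY inst_id, name;
```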

Each instance is configured with:

- Instance-specific SID (e.g., dbprod1, dbprod2, dbprod3, dbprod4)

- Database services that can be configured for:

  - Load balancing (distributing connections across the instances offering the service)

  - Service affinity (pinning workloads to specific nodes)
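In a RAC database, services are created and placed with srvctl; once they are running, their placement can be checked from SQL. With service affinity, a pinned service shows up only on its preferred instances, while a load-balanced service appears on all of them. A sketch; internal services are filtered out by their SYS$ prefix.

```sql
-- Instances (and hosts) on which each service is currently running.
SELECT s.name          AS service_name,
       i.instance_name,
       i.host_name
FROM   gv$active_services s
JOIN   gv$instance        i ON i.inst_id = s.inst_id
WHERE  s.name NOT LIKE 'SYS$%'          -- hide internal services
ORDER  BY s.name, i.instance_name;

-- Load-balancing goals configured per service.
SELECT name, goal, clb_goal
FROM   dba_services
ORDER  BY name;
```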

Transaction Coordination Across Nodes

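To keep the buffer caches of all RAC instances coherent, Oracle uses Cache Fusion, which ships data blocks between instances over the private interconnect instead of through disk. Each instance still writes its own redo thread and uses its own undo tablespace. Here’s how it works:

- Block shipping: When one instance needs a block that another instance holds in its cache, Oracle RAC transfers the block (or a consistent-read copy built from undo) across the private interconnect.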

- Global Cache Service (GCS): Manages data block access.

- Global Enqueue Service (GES): Manages locks (e.g., TX, TM).

This ensures all changes are coherent across all instances, enabling multiple sessions on different nodes to interact with the same data safely.
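Cache Fusion traffic shows up in the instance statistics, so a rough gauge of interconnect health is the average time each instance waits to receive a consistent-read (CR) or current block from another instance. A sketch, assuming GV$ access; the receive-time statistics are recorded in centiseconds, which is why they are multiplied by 10 to get milliseconds.

```sql
-- Average Cache Fusion block receive time per instance, in milliseconds.
SELECT inst_id,
       ROUND(10 * SUM(DECODE(name, 'gc cr block receive time', value))
             / NULLIF(SUM(DECODE(name, 'gc cr blocks received', value)), 0), 2)
         AS avg_cr_receive_ms,
       ROUND(10 * SUM(DECODE(name, 'gc current block receive time', value))
             / NULLIF(SUM(DECODE(name, 'gc current blocks received', value)), 0), 2)
         AS avg_current_receive_ms
FROM   gv$sysstat
WHERE  name IN ('gc cr block receive time', 'gc cr blocks received',
                'gc current block receive time', 'gc current blocks received')
GROUP  BY inst_id
ORDER  BY inst_id;

-- Confirm each instance is using the private network for the interconnect.
SELECT inst_id, name, ip_address, is_public, source
FROM   gv$cluster_interconnects
ORDER  BY inst_id;
```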

Real-World Example: Two Updates, Two Instances

I mentioned at the beginning of this post that I’d walk through a simplified example of how concurrency can impact a RAC database: as the workload on popular objects increases, transactional row lock contention (wait event: enq: TX - row lock contention) can occur. Let’s walk through a scenario with two RAC instances (DBprod1 and DBprod2) and a simple example table, employee, which is shared by both instances (and so by the users connected to either one).
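To reproduce the walkthrough on a two-instance test cluster, you need a table to work on. The table definition isn’t part of the example, so this is an assumed, minimal version: only emp_id and salary come from the UPDATE statements; the name column and the starting salary of 50,000 are illustrative.

```sql
-- Hypothetical setup for the walkthrough.
CREATE TABLE employee (
  emp_id  NUMBER        PRIMARY KEY,
  name    VARCHAR2(50),
  salary  NUMBER(10,2)  NOT NULL
);

INSERT INTO employee (emp_id, name, salary)
VALUES (101, 'Example Employee', 50000);

COMMIT;
```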

Step 1 – Session 1 (Node DBprod1):

```sql
UPDATE employee SET salary = salary + 1000 WHERE emp_id = 101;
-- No COMMIT yet
```

Step 2 – Session 2 (Node DBprod2):

```sql
UPDATE employee SET salary = salary + 500 WHERE emp_id = 101;
```

At this point, RAC will perform the following:

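- Session 2 requests the current version of the data block holding emp_id = 101 via Cache Fusion.

- Since DBprod1 holds the most recent (uncommitted) version, Session 2 finds the row locked by Session 1’s still-active transaction.

- Session 2 blocks, waiting on enq: TX - row lock contention, until Session 1 commits or rolls back. This prevents inconsistent (lost) updates.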
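While Session 2 is waiting, the cross-instance blocking chain is visible from any node. This sketch assumes DBprod1 and DBprod2 are instance numbers 1 and 2; in that case you’d expect a waiter on instance 2 whose blocker is on instance 1, and the holding session often shows as INACTIVE because it simply hasn’t committed yet.

```sql
-- Sessions waiting on row locks, and the instance/session holding each lock.
SELECT w.inst_id            AS waiting_inst,
       w.sid                AS waiting_sid,
       w.event,
       w.seconds_in_wait,
       w.blocking_instance  AS holding_inst,
       w.blocking_session   AS holding_sid,
       h.username           AS holding_user,
       h.status             AS holding_status
FROM   gv$session w
LEFT JOIN gv$session h
       ON  h.inst_id = w.blocking_instance
       AND h.sid     = w.blocking_session
WHERE  w.event = 'enq: TX - row lock contention'
ORDER  BY w.seconds_in_wait DESC;
```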

Step 3 – What Each Session Sees:

If Session 1 commits:

- Session 2’s update will proceed and further modify the already-updated row.

- Once Session 2 also commits, queries from either instance reflect the final state after both updates. Until then, other sessions see only Session 1’s committed change.

If Session 1 has not yet committed (so Session 2’s update is still waiting) and a different session, on either instance, queries the row:

- That session will not see Session 1’s update.

- It gets the consistent (pre-update) view of the data, thanks to read consistency maintained via undo.
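The whole sequence can be laid out as a single timeline. This is a sketch that assumes emp_id 101 starts with a salary of 50,000 (a hypothetical value) and introduces a hypothetical third session, S3, as an unblocked reader; each statement is labeled with the session that runs it, and the comments show the value that session would see under Oracle’s default READ COMMITTED isolation.

```sql
-- [S1 @ DBprod1]
UPDATE employee SET salary = salary + 1000 WHERE emp_id = 101;  -- S1 now sees 51000 (uncommitted)

-- [S3 @ DBprod2]  a separate, unblocked reader
SELECT salary FROM employee WHERE emp_id = 101;                  -- 50000: read-consistent view from undo

-- [S2 @ DBprod2]
UPDATE employee SET salary = salary + 500 WHERE emp_id = 101;   -- hangs on enq: TX - row lock contention

-- [S1 @ DBprod1]
COMMIT;                                                           -- S2's UPDATE now completes on 51000

-- [S1 @ DBprod1]
SELECT salary FROM employee WHERE emp_id = 101;                  -- 51000: S2 has not committed yet

-- [S2 @ DBprod2]
COMMIT;

-- [any session, either instance]
SELECT salary FROM employee WHERE emp_id = 101;                  -- 51500
```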

The Critical Role of Failover and Shared Storage

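Failover is one of RAC’s primary strengths. If one instance goes down, sessions can reconnect to a surviving instance through the SCAN listener and VIPs. How seamless that reconnect is depends on the client configuration (such as TAF), and any uncommitted transactions on the failed instance are rolled back.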

However, since RAC uses shared storage, the performance and availability of that storage is critical. Any issue with the storage layer impacts all nodes. That’s why Tier 1 storage with redundancy and performance guarantees is non-negotiable in a RAC environment.
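After a failover test (for example, shutting down one instance), a query like the following shows which user sessions are TAF-enabled and which have actually moved to a surviving instance. A sketch, assuming GV$ access; sessions with FAILOVER_TYPE = NONE simply drop and must reconnect on their own.

```sql
-- Failover configuration of user sessions, and whether they have failed over.
SELECT inst_id,
       username,
       service_name,
       failover_type,     -- NONE, SESSION, SELECT, ... (newer releases add more)
       failover_method,   -- NONE, BASIC, PRECONNECT
       failed_over,       -- YES once the session has moved to a surviving instance
       COUNT(*) AS sessions
FROM   gv$session
WHERE  type = 'USER'
GROUP  BY inst_id, username, service_name,
          failover_type, failover_method, failed_over
ORDER  BY inst_id, username;
```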

Things to Remember

Oracle RAC remains one of the most mature, enterprise-ready solutions for instance high availability and horizontal scaling of databases. But with great power comes complexity:

- Properly configure your Clusterware and shared storage, and verify their stability before creating the database.

- Monitor interconnect latency and block contention.

- Oracle RAC cannot span cloud regions or widely separated data centers. When designing in the cloud, use an appropriate secondary solution, such as Data Guard, to protect against outages, and make sure the solution is supported.

- Design services to manage workload distribution intelligently.

- Develop applications that are “RAC aware” so sessions can automatically reconnect and resume work after a failure, for example with Transparent Application Failover (TAF).

- Understand the intricacies of RAC cache coordination and how transactions are serialized across instances.

- As workloads increase, it’s surprisingly simple to add nodes to an existing RAC environment, distributing work that may be stressing the existing nodes and scaling out (as opposed to scaling up with a larger single machine in a non-clustered environment).
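For monitoring block contention, and for spotting the popular objects that drive the concurrency problems described at the start of this post, segment-level statistics summed across instances are a reasonable first look. A sketch, assuming GV$ access; a segment near the top for both row lock waits and current blocks received is a likely candidate for the design-level fixes that adding nodes won’t solve.

```sql
-- Top 20 segments by row lock waits and Cache Fusion block transfers,
-- summed across all instances (cumulative since startup).
SELECT *
FROM  (
  SELECT owner,
         object_name,
         SUM(DECODE(statistic_name, 'row lock waits', value, 0))             AS row_lock_waits,
         SUM(DECODE(statistic_name, 'gc cr blocks received', value, 0))      AS gc_cr_blocks,
         SUM(DECODE(statistic_name, 'gc current blocks received', value, 0)) AS gc_current_blocks
  FROM   gv$segment_statistics
  WHERE  statistic_name IN ('row lock waits',
                            'gc cr blocks received',
                            'gc current blocks received')
  GROUP  BY owner, object_name
  ORDER  BY row_lock_waits DESC, gc_current_blocks DESC
)
WHERE  ROWNUM <= 20;
```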

With these considerations in place, RAC can offer the kind of reliability, resilience, and performance that has impressed many relational database technologists over the years. I’ll continue to post on RAC as part of an ongoing series, but I’ll throw in other tech topics along the way over the next couple of months. Enjoy!