Abstract Review Tips

Yes, this is for my RMOUG abstract reviewers, but it may help other conferences and user groups, too. We have some incredible content at the RMOUG (Rocky Mtn. Oracle User Group) Training Days conference, and it’s all due to a carefully controlled, well-thought-out process that has evolved over time to ensure that our abstract selection is as fair as possible and offers opportunities to new speakers as well.

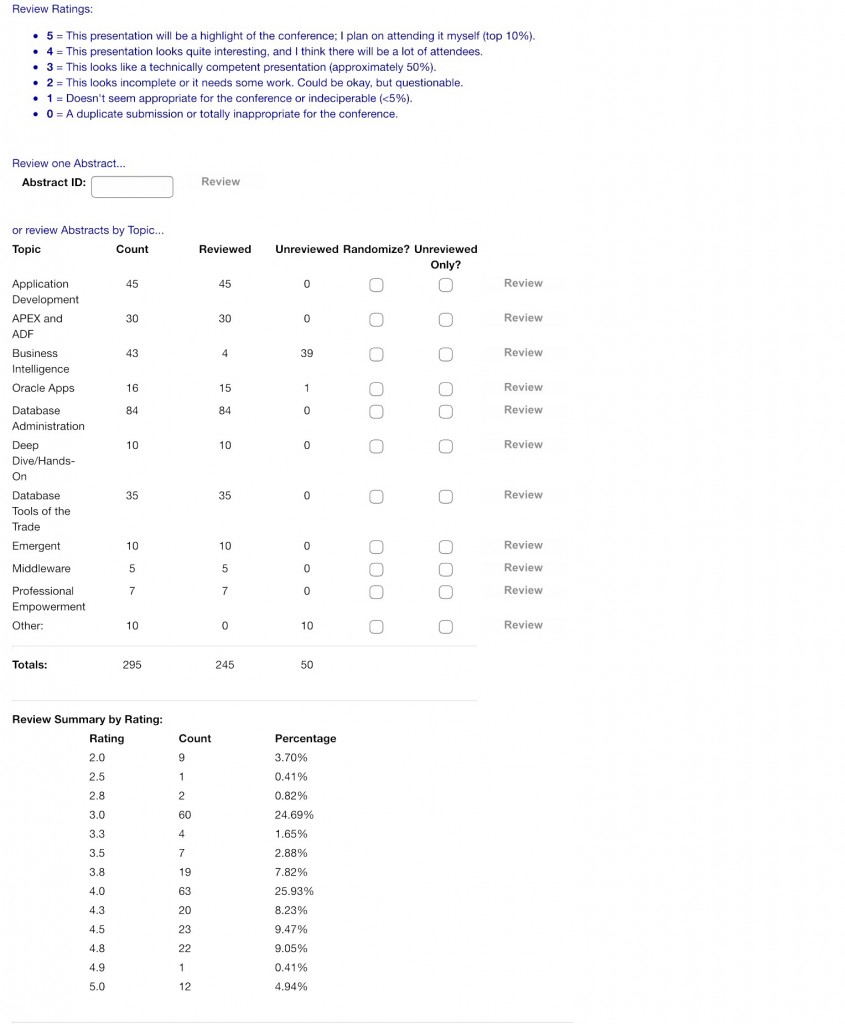

I’m going to share my abstract scoring summary with the rest of the class to start, so you get an idea of how we score. As a strong DBA who has done development and a bit of mobile development, I can go across a number of tracks to perform reviews, but you will definitely notice that I don’t review the following:

1. Almost anything in the BI track.

2. Any abstracts in development or other tracks that I’m unsure of.

3. My own abstracts. (Yes, I submit mine to go through the abstract process, but then pull my high-scoring ones afterwards and retain them to add to the schedule if we have a last-minute cancellation. Tim has done the same these last few years.)

You will also notice that I don’t just score in whole numbers. I use fractional scores as well, which gives more variation in the results.

I carefully inspect the “Review Summary by Rating” to verify that the percentages of my scores are somewhere close to what we recommend at the top of the summary page (seen in blue at the top of the screenshot).

How to Review

Knowing what to look for and how to score it is important. We are a tech conference, so if your abstract appears to be marketing anything, or isn’t highly technical, you may find it receives a low score.

Our reviewers are asked to look for great content, variety in content, and new and interesting topics, and they will score an abstract down if it is incomplete or has grammatical or spelling errors. This may seem harsh, but if someone can’t take the time to fill out an abstract, it’s difficult to believe quality time will be put into the presentation.

Why It’s Important

Our reviewers and review scores are crucial to our selection process. This next week, after we close reviews on the 6th of October, we will pull all of the abstracts with their overall average scores. We will sort each track from highest to lowest score and then pick out the top-scoring abstracts for each track.

1. Each track is allotted the same percentage of the available session slots as its percentage of the total abstracts submitted.

2. We then look at the number of high-scoring abstracts per speaker across ALL tracks and remove each speaker’s lowest-scoring ones until they have a maximum of two each. (We only have 100 session slots available and we want everyone to have as much opportunity to speak as possible!)

3. I have marked in the comments anyone I see as a new speaker. We look at how their abstract scored and decide whether we can add them freely to the schedule or whether they may require mentoring from a senior speaker from the conference. We add these new speakers to the openings made by step 2.

4. We build out the schedule into tracks, so that no matter what your specialty area, you should always have something incredible to attend.

5. Those high-scoring abstracts I had to hold back because the speaker already had two accepted? I retain those, along with mine and Tim’s, to use as fillers if there is a last-minute cancellation.
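For anyone who thinks better in code, steps 1, 2 and 5 above can be sketched roughly as follows. This is only an illustration, not RMOUG’s actual tooling: the function names, the dictionary fields (`track`, `speaker`, `score`) and the largest-remainder rounding rule are all assumptions made for the example. The new-speaker step is a judgment call and is left out.

```python
from collections import defaultdict


def allot_slots(submissions_per_track, total_slots=100):
    """Give each track a share of slots proportional to its share of submissions.

    Assumption: fractional slots are resolved with largest-remainder rounding.
    """
    total = sum(submissions_per_track.values())
    exact = {t: n * total_slots / total for t, n in submissions_per_track.items()}
    slots = {t: int(x) for t, x in exact.items()}
    leftover = total_slots - sum(slots.values())
    # Hand the remaining slots to the tracks with the largest fractional parts.
    by_remainder = sorted(exact, key=lambda t: exact[t] - slots[t], reverse=True)
    for t in by_remainder[:leftover]:
        slots[t] += 1
    return slots


def cap_per_speaker(abstracts, max_per_speaker=2):
    """Keep each speaker's top-scoring abstracts; hold back the rest as fillers."""
    kept, held = [], []
    count = defaultdict(int)
    for a in sorted(abstracts, key=lambda a: a["score"], reverse=True):
        if count[a["speaker"]] < max_per_speaker:
            count[a["speaker"]] += 1
            kept.append(a)
        else:
            held.append(a)
    return kept, held


def select(abstracts, total_slots=100):
    """Top scorers per track first, then the two-per-speaker cap across all tracks."""
    per_track = defaultdict(list)
    for a in abstracts:
        per_track[a["track"]].append(a)
    slots = allot_slots({t: len(v) for t, v in per_track.items()}, total_slots)
    top = []
    for t, items in per_track.items():
        items.sort(key=lambda a: a["score"], reverse=True)
        top.extend(items[: slots[t]])
    # Anything removed by the cap opens a slot (for new speakers, say)
    # and is kept in reserve for last-minute cancellations.
    return cap_per_speaker(top)
```

Calling `select(abstracts)` on a list of such dictionaries returns two lists: the accepted abstracts and the ones held back as fillers. The openings left by the cap are not refilled automatically here, since that is where the new-speaker review comes in.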

I’ve just finished my reviews and I’m incredibly impressed with the abstracts this year! The quality of the abstracts and the variety of impressive topics can only mean that it’s going to be the BEST RMOUG Training Days EVER!!
