AWR Warehouse Fails on Upload- No New Snapshots

This issue can be seen in either EM12c or EM13c AWR Warehouse environments. It occurs when there is a outage on the AWR Warehouse and/or the source database that is to upload to it.

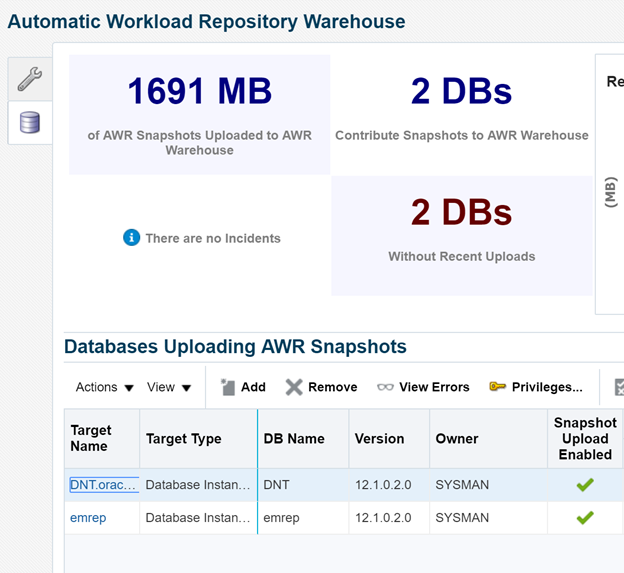

The first indication of the problem, is when databases appear to not have uploaded once the environments are back up and running.

The best way to see an upload, from beginning to end is to highlight the database you want to load manually, (click in the center of the row, if you click on the database name, you’ll be taken from the AWR Warehouse to the source database’s performance home page.) Click on Actions, Upload Snapshots Now.

A job will be submitted and you’ll be aware of it by a notification at the top of the console:

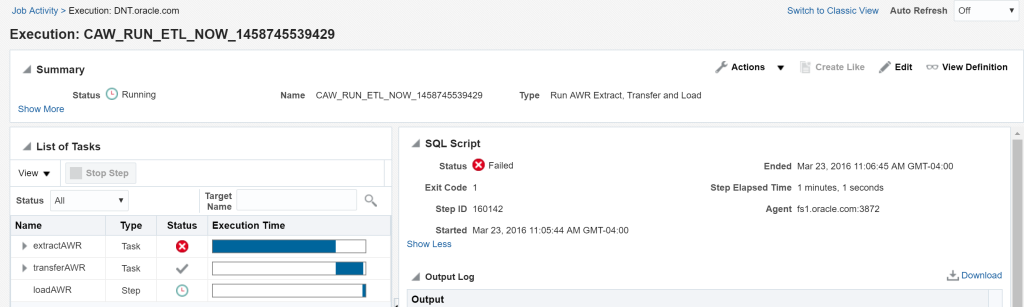

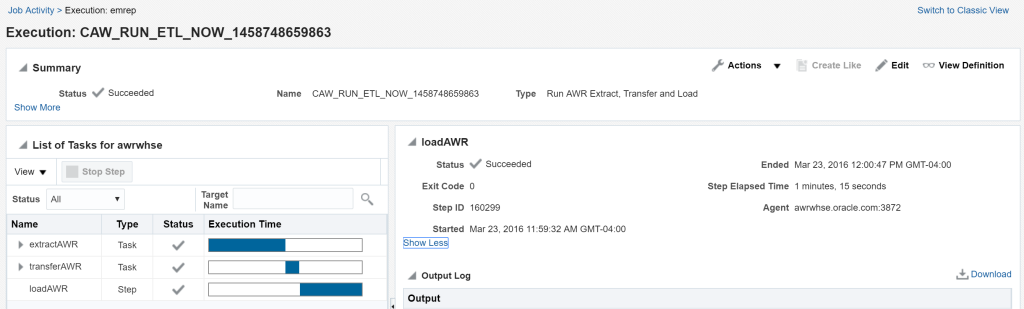

Click on the View Job Details and you’ll be taken to the job that will run all steps of the AWR Warehouse ETL-

- Inspect what snapshots are required by comparing the metadata table vs. what ones are in the source database.

- Perform a datapump export of those snapshots from the AWR schema and update the metadata tables.

- Perform an agent to agent push of the file from the source database server to the AWR Warehouse server.

- Run the datapump import of the database data into the AWR Warehouse repository, partitioning by DBID, snapshot ID or a combination of both.

- Update support tables in the Warehouse showing status and success.

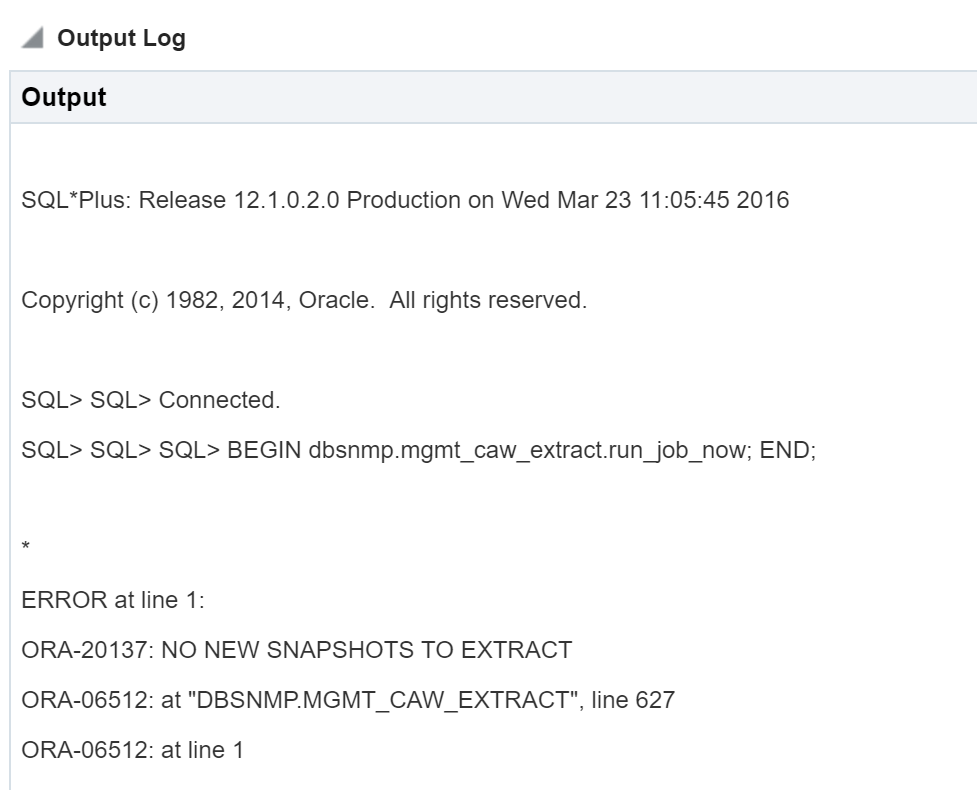

Now note the steps where metadata and successes are updated. We’re now inspecting the job that we’re currently running to update our tables, but instead of success, we see the following in the job logs:

We can clearly see that the extract, (ETL step on the source database to datapump the AWR data out) has failed.

Scrolling down to the Output, we can see the detailed log to see the error that was returned on this initial step:

ORA-20137: NO NEW SNAPSHOTS TO EXTRACT.

Per the Source database, in step 1, where it compares the database snapshot information to the metadata table, it has returned no new snapshots that should be extracted. The problem, is that we know on the AWR Warehouse side, (seen in the alerts in section 3 of the console) there are snapshots that haven’t been uploaded in a timely manner.

How to Troubleshoot

First, let’s verify what the AWR Warehouse believes is the last and latest snapshot that was loaded to the warehouse via the ETL:

Log into the AWR Warehouse via SQL*Plus or SQLDeveloper and run the following query, using the CAW_DBID_MAPPING table, which resides in the DBSNMP database:

SQL> select target_name, new_dbid from caw_dbid_mapping;

TARGET_NAME -------------------------------------------------------------------------------- NEW_DBID ---------- DNT.oracle.com 3695123233 cawr 1054384982 emrep 4106115278

and what’s the max snapshot that I have for the database DNT, the one in question?

SQL> select max(dhs.snap_id) from dba_hist_snapshot dhs, caw_dbid_mapping cdm 2 where dhs.dbid=cdm.new_dbid 3 and cdm.target_name='DNT.oracle.com'; MAX(DHS.SNAP_ID) ---------------- 501

The Source

These next steps require querying the source database, as we’ve already verified the latest snapshot in the AWR WArehouse and the error occurred on the source environment, along with where it failed at that step in the ETL process.

Log into the database using SQL*Plus or another query tool.

We will again need privileges to the DBSNMP schema and the DBA_HIST views.

SQL> select table_name from dba_tables where owner='DBNSMP' and table_name like 'CAW%'; TABLE_NAME -------------------------------------------------------------------------------- CAW_EXTRACT_PROPERTIES CAW_EXTRACT_METADATA

These are the two tables that hold information about the AWR Warehouse ETL process in the source database.

There are a number of ways we could inspect the extract data, but the first thing we’ll do is get the last load information from the metadata table, which will tell us what were the

SQL> select begin_snap_id, end_snap_id, start_time, end_time, filename from caw_extract_metadata where extract_id=(select max(extract_id) from caw_extract_metadata); 502 524 23-MAR-16 10.43.14.024255 AM 23-MAR-16 10.44.27.319536 AM 1_2EB95980AB33561DE053057AA8C04903_3695123233_502_524.dmp

So we can see that per the metadata table, the ETL BELIEVES it’s already loaded the snapshots from 502-524.

We’ll now query the PROPERTIES table that tells us where our dump files are EXTRACTED TO:

SQL> select * from caw_extract_properties 2 where property_name='dump_dir_1'; dump_dir_1 /u01/app/oracle/product/agent12c/agent_inst

ls /u01/app/oracle/product/agent12c/agent_inst/*.dmp 1_2EB95980AB33561DE053057AA8C04903_3695123233_502_524.dmp

So here is our problem. We have a dump file that was created, but never performed the agent to agent push or load to the AWR Warehouse. As the source table was updated with the rows to the METADATA table, it now fails to load these rows.

Steps to Correct

- Clean up the dump file from the datapump directory

- Update the METADATA table

- Rerun the job

cd /u01/app/oracle/product/agent12c/agent_inst rm 1_2EB95980AB33561DE053057AA8C04903_3695123233_502_524.dmp

Note: You can also choose to rename the extension in the file if you wish to retain it until you are comfortable that everything is successfully loading, but be aware of size constraints in your $AGENT_HOME directory. I’ve seen issues due to space constraints.

Log into the database and remove the latest row update in the metadata table:

select extract_id from caw_extract_metadata where being_snap_id=502 and end_snap_id=504; 101

delete from caw_extract_metadata where extract_id=101; 1 row deleted. commit;

Log into your AWR Warehouse dashboard and run the manual Upload Snapshots Now for the database again.

anyway to change the dump directory? /u01/app/oracle/product/agent12c/agent_inst

it’s a real pain not able to change it… should not be a complicated change? probably need to modify MGMT_CAW_EXTRACT and the perl script which does the transfer?

I’ve just been asked to work on this exact issue. I’ll let you know soon so watch my blog for a new post on this topic!

Thanks,

Kellyn