Azure Automation of A-to-Z, Part I

DevOps deployments and automation have numerous tools at their disposal, but most often, scripting is required. Although I’m a Microsoft Azure fanatic, I am also a strong advocate of Linux and with my two decades on Unix, I strongly prefer BASH over PoSH. I find the maturity of BASH and KSH highly attractive over PoSH and with my experience, I’m simply more skilled with shells native to the Linux OS.

Before we get too far in, I know some of the text can be difficult to read and/or you want to copy and paste or you want some of the scripts for yourself. All of this can be found on the following GitHub Repository.

Do the (Shell) Thing, Julie

As you learn to write shell scripts, no matter the choice in scripting, it’s critical to learn how to reuse code to:

- Lessen the amount of code to maintain.

- Help establish standards in coding practices.

- Establish centralized locations for scripts, as reuse of existing scripts often assists in this.

Taking a project that’s near and dear to my heart, we’ll use the Higher Education Solution that was first built out by my team with Patrick LeBlanc, Steve Pontello, Jason Morales and Dustin Ryan. The goal is to have an end-to-end solution that can be deployed for any customer as part of a POC (it was originally built on-premises, and I’ve since been working to move it to Azure). It provides the customer with the following:

- PaaS databases: staging and data warehouse.

- Firewall rules to connect the Azure CLI to the PaaS databases.

- Analysis Services Semantic Model for scalability and governance.

- Azure Data Factory to manage SSIS packages via pipelines, scheduling and management.

- Sample data in CSV files, which can also serve as examples for customers creating versions of the files for their own data loads.

- ELT process to load data into staging and then to the data warehouse.

- Logical objects in staging and the data warehouse.

- On-premises Data Gateway to connect Power BI Desktop to the Azure environments.

- Three starter Power BI dashboards to give initial insight into the data and a starting point for customers to work from.

This is a large undertaking, as we’re not talking about the standard “deploy some servers and turn it over to the DBAs to do their thang” or “import some data and then turn it over to the developers” kind of thang. This is a beginning-to-end solution: I want customers to be able to run a script, answer a few questions it asks interactively, and then have everything deployed to their Azure environment and onto their desktop. The customer should then be able to publish the Power BI reports up to the web, letting others get a taste of what they can do with just the flip of a switch.

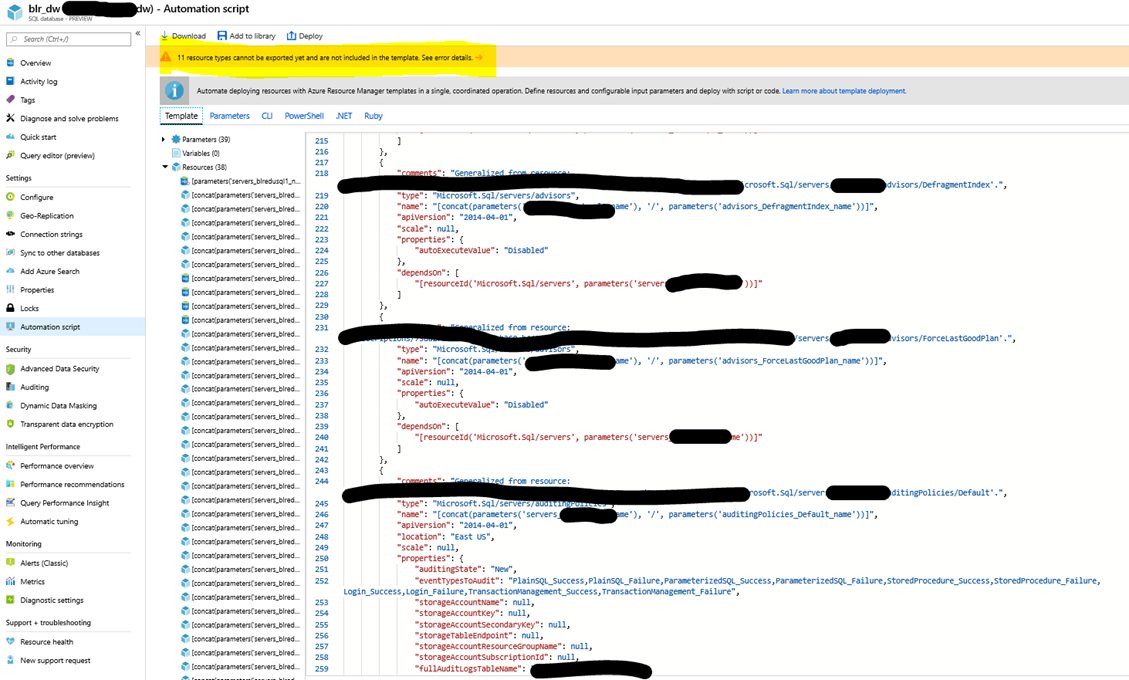

If you’ve looked at pulling automation scripts from Azure, you’ll notice they can be PoSH or BASH with LARGE JSON template and parameter files. These files aren’t built with the goal of dynamic builds, nor are they easy to maintain. Due to this, I looked towards the Azure CLI and BASH and created my own. I also deployed everything into a single resource group to simplify management, scaling out, and removal if, after the POC, the customer isn’t yet ready to deploy into their environment.

Part A, Introductions are Important

This was rather simple, as it’s common practice to deploy VMs with Azure DB/SQL Server and/or SQL Server/Azure DB in PaaS. The goal was to remove the heavy dependence on JSON files. If you were to log into the Azure Portal, you would see the amount of JSON that is created as part of the automation scripts.

Now the script under the CLI tab is sufficient for recreating the environment “as is” but remember, I wanted this to be interactive and to give customers the ability to meet their naming conventions, etc. Keeping as close as possible to the format and design of the script that Microsoft offers as part of the automation scripts, while enhancing it to meet my goals, is the right thing to do. It results in something that is recognizable by Microsoft support and still keeps to our focus.

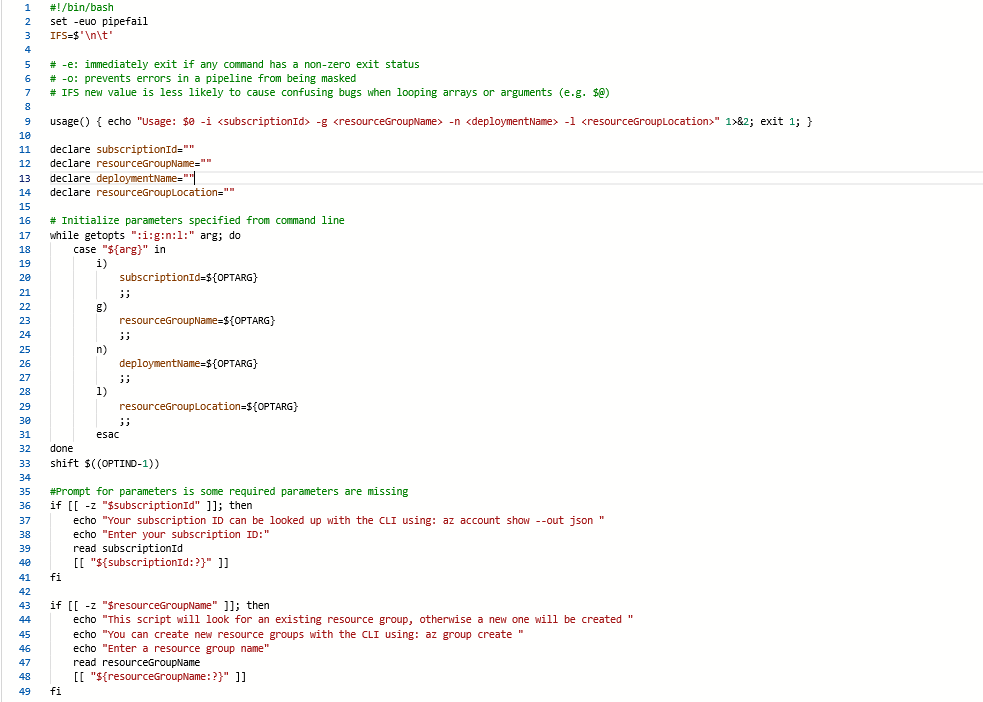

Where their script begins like this:

My Turn

My script doesn’t veer off course from the original. I’ve added clear comments to help the user understand the goal, usage and steps, but notice the similarities:

So, let’s talk about all these additions and why the introduction to a script is as important as an introduction to a paper or story.

As with the script from Microsoft, I’m starting with a reminder to the user executing the script that there are requirements for its usage. If the required arguments aren’t supplied, the USAGE() step prints that reminder and the script simply exits rather than running with missing values.
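To make the usage guard concrete, here is a minimal sketch of the pattern. The option letters, the message text and the `require_arg` helper are illustrative assumptions, not copied from the original script.

```bash
# Sketch of a usage guard. Option letters and wording are hypothetical.

usage() {
  # Print the reminder to stderr and stop the script.
  echo "Usage: $0 -g <resourceGroupName> -l <location>" >&2
  exit 1
}

# Hypothetical helper: stop with the usage text when a required value is empty.
require_arg() {
  local name="$1" value="$2"
  if [[ -z "$value" ]]; then
    echo "Missing required value: ${name}" >&2
    usage
  fi
}
```

A script would typically call something like `require_arg resourceGroupName "$resourceGroupName"` right before the first command that depends on the value.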

We then declare the variables that the arguments passed to the script will populate, and the WHILE GETOPTS step reads those arguments. What’s interesting is that we will also ask the user questions interactively to fulfill these values as we proceed into the beginning of the script execution.
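One way to combine declared variables, a `while getopts` loop and interactive questions is sketched below. The variable names, option letters and the `prompt_if_empty` helper are assumptions for illustration; the real script may organize this differently.

```bash
# Sketch: declare the variables, fill them from options, then prompt for
# whatever is still empty. All names here are hypothetical.

declare subscriptionId=""
declare resourceGroupName=""
declare resourceGroupLocation=""

parse_args() {
  local arg OPTIND=1   # local OPTIND so the function can be called repeatedly
  while getopts ":i:g:l:" arg; do
    case "${arg}" in
      i) subscriptionId="${OPTARG}" ;;
      g) resourceGroupName="${OPTARG}" ;;
      l) resourceGroupLocation="${OPTARG}" ;;
      *) echo "Unknown or incomplete option: -${OPTARG}" >&2; return 1 ;;
    esac
  done
}

# Ask interactively only for values that were not passed on the command line.
prompt_if_empty() {
  local var_name="$1" question="$2" answer
  if [[ -z "${!var_name}" ]]; then
    read -r -p "${question}: " answer
    printf -v "$var_name" '%s' "$answer"
  fi
}
```

A script would call `parse_args "$@"` first and then, for example, `prompt_if_empty resourceGroupName "Name for the new resource group"`. The indirect expansion `${!var_name}` and `printf -v` are BASH features, so this sketch will not run under a plain POSIX `sh`.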

I’m going to skip over the minimal JSON template and parameters that are used by this script in this post, as I want to focus on the physical deployment outside of the Azure Data Factory and the Azure Analysis Services (we’ll come back to this in another post).

Dynamic Pull and Requests

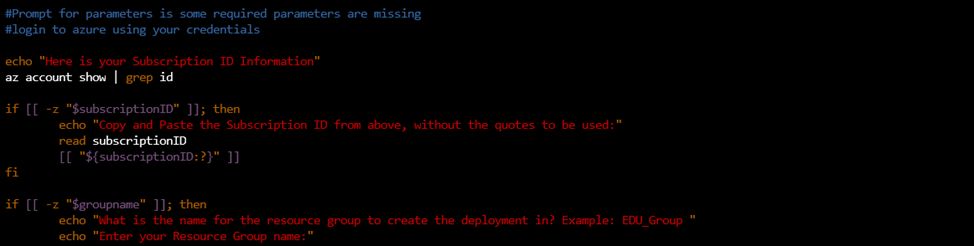

In the next step of the script, we locate the customer’s Azure subscription ID using the AZ ACCOUNT SHOW command. The current subscription ID is returned and the customer is asked to copy and paste it into the prompt. The reason for this is that customers may want to use a different subscription than the one they are currently using; this lets them see which subscription they are logged into and enter a different one at this step.
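The subscription step could look roughly like the sketch below. The function names are hypothetical, and falling back to the current subscription when the user just presses Enter is a convenience I have assumed rather than something the original script is known to do.

```bash
# Sketch: show the current subscription, then let the user confirm or replace it.

show_current_subscription() {
  # --query id narrows the JSON to the subscription ID; tsv strips the quotes.
  az account show --query id --output tsv
}

choose_subscription() {
  local current chosen
  current="$(show_current_subscription)"
  echo "You are currently logged into subscription: ${current}" >&2
  read -r -p "Paste the subscription ID to deploy to: " chosen
  # Assumed behavior: an empty answer keeps the current subscription.
  echo "${chosen:-$current}"
}
```

The result can then be handed to `az account set --subscription "$subscriptionId"` so that every later command runs against the chosen subscription.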

Next, I ask the customer to create a unique RESOURCE GROUP to own all the resources we’re about to deploy. This simplifies the monitoring, maintenance and management of the deployment. The next step is to create two passwords, one for the SQL Server and the next for their Analysis Server. I give examples and let the user know that if they don’t follow password requirements, the deployment will fail.
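Because a bad password only surfaces when the deployment fails, a rough pre-check at the prompt can save a retry. The sketch below is an assumption on my part: it encodes the commonly documented Azure SQL rule of at least eight characters drawn from three of four character classes, which should be verified against the current Azure documentation. The function names are hypothetical.

```bash
# Sketch: rough password pre-check before anything is sent to Azure.
# Assumed rule: 8+ characters and at least three of upper, lower, digit, symbol.

password_ok() {
  local pw="$1" classes=0
  (( ${#pw} >= 8 )) || return 1
  [[ "$pw" =~ [[:upper:]] ]] && (( classes += 1 ))
  [[ "$pw" =~ [[:lower:]] ]] && (( classes += 1 ))
  [[ "$pw" =~ [[:digit:]] ]] && (( classes += 1 ))
  [[ "$pw" =~ [^[:alnum:]] ]] && (( classes += 1 ))
  (( classes >= 3 ))
}

# Keep asking until the password passes the pre-check; -s hides the typing.
prompt_password() {
  local label="$1" pw
  while true; do
    read -r -s -p "Password for ${label}: " pw || return 1
    echo >&2
    if password_ok "$pw"; then
      printf '%s\n' "$pw"
      return 0
    fi
    echo "Password does not meet the requirements, please try again." >&2
  done
}
```

Called as `sqlPassword="$(prompt_password "SQL Server")"`, this keeps the password out of the terminal scrollback while still letting the script reuse it later.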

The last two initial questions ask the customer to create a name for their SQL Server host and to choose, from a list, the Azure location for the resources to be created in. I use AZ ACCOUNT LIST-LOCATIONS, and this is a step where BASH comes in handy, as I have a simple GREP and AWK to filter what is returned to the prompt to choose from.
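A filter of this kind might be sketched as follows. The exact GREP and AWK expressions in the original script are not shown, so the pipeline, the default `us` filter and the function name below are assumptions.

```bash
# Sketch: list Azure locations, keep those matching a filter, and number them.

list_locations() {
  local filter="${1:-us}"   # hypothetical default: only show US regions
  az account list-locations --query "[].name" --output tsv \
    | grep -i -- "$filter" \
    | awk '{ printf "%2d) %s\n", NR, $1 }'
}
```

Running `list_locations europe` would print a short numbered menu of matching region names for the customer to choose from, instead of the full JSON document that the command returns by default.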

Last of the Introduction

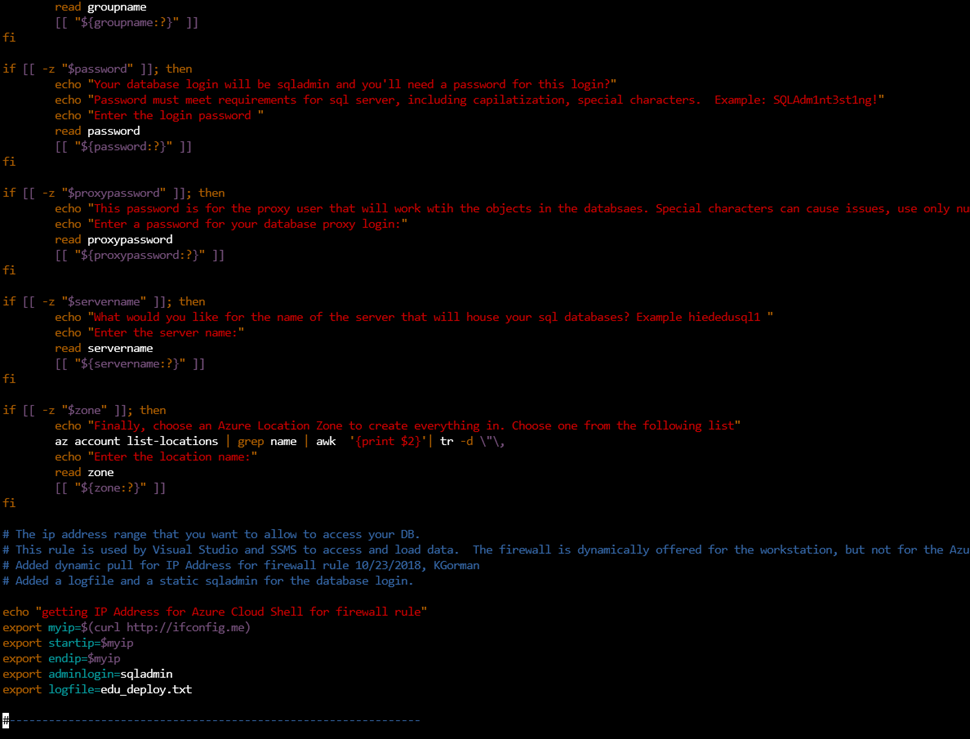

To finish up today’s investigation into this script, we have the firewall rule. Now most tools, including SQL Server Management Studio (SSMS) and Visual Studio, offer to create a firewall rule for your workstation upon logging into the Azure resource, but with the Azure Cloud Shell, the rule needs to be created before you can log into the SQL Server environment. My script uses a CURL command against ifconfig.me to capture the IP address and uses it as both the beginning and end IP for a firewall rule that it creates, so the Azure Cloud Shell can run the subsequent scripts that we’ll begin to walk through in the next post.
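The firewall step can be sketched as below. The rule name `AzureCloudShell`, the function names and the IPv4 sanity check are my own assumptions; only the use of CURL with ifconfig.me and a single-address rule comes from the description above.

```bash
# Sketch: capture the shell's public IP and open the SQL Server firewall for it.

get_public_ip() {
  curl -s ifconfig.me
}

create_shell_firewall_rule() {
  local resource_group="$1" server="$2" ip
  ip="$(get_public_ip)"
  # Sanity check (assumed) so an error page is never passed on as an address.
  if [[ ! "$ip" =~ ^[0-9]+\.[0-9]+\.[0-9]+\.[0-9]+$ ]]; then
    echo "Could not determine a public IPv4 address (got: ${ip})" >&2
    return 1
  fi
  # Same address for start and end, so the rule covers exactly one IP.
  az sql server firewall-rule create \
    --resource-group "$resource_group" \
    --server "$server" \
    --name "AzureCloudShell" \
    --start-ip-address "$ip" \
    --end-ip-address "$ip"
}
```

Keep in mind that a Cloud Shell session may come back with a different outbound address later, in which case the rule would need to be created again.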

Notice that the last lines I’ve pasted here let you know we’re not going to fool around with our login user: it’s going to be SQLADMIN that’s created. They also display the LOGFILE file name that will be used. As customers will be creating these entries as they execute the script, it should be expected that they’ll forget the entries or make a typo. Having the output written to a log file, along with the success of each step, is a huge benefit for them. Also note that although there have been a lot of enhancements to the original script from Microsoft, there’s also a lot about it that’s very similar in format and steps. This is important as we begin to build out, in the end, a wrapper script for a number of deployment options for my group.
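A simple way to get that kind of log is sketched here. The file naming scheme and the `log` and `run_step` helpers are hypothetical; the original script’s LOGFILE handling may differ.

```bash
# Sketch: write each answer and the outcome of each step to a log file.

# Hypothetical naming scheme; an existing LOGFILE environment variable wins.
logfile="${LOGFILE:-./deploy_$(date +%Y%m%d_%H%M%S).log}"

log() {
  # Timestamped line, shown on screen and appended to the log.
  printf '%s %s\n' "$(date '+%Y-%m-%d %H:%M:%S')" "$*" | tee -a "$logfile"
}

run_step() {
  local description="$1"; shift
  # Command output goes to the log; only the outcome is echoed to the user.
  if "$@" >>"$logfile" 2>&1; then
    log "SUCCESS: ${description}"
  else
    log "FAILED: ${description}"
    return 1
  fi
}
```

For example, `log "Resource group: ${resourceGroupName}"` records an answer, and `run_step "Create resource group" az group create --name "$resourceGroupName" --location "$location"` records whether the step worked. Passwords are best left out of the log.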
