I know, I know- there’s a number of you out there thinking-

I’m being brought in on more and more of these projects due to a recent change for some Exadata backup components many companies kept onsite, (parts that wear out more often, like PDUs and cell disks) which are no longer an option and that moving to Azure is a viable option for these workloads if you know what to identify and address before the move to the cloud.

Engineered, Not Iron

An Exadata is an engineered system- database nodes, secondary cell nodes, (also referred to as storage nodes/cell disks), InfiniBand for fast network connectivity between the nodes, specialized cache, along with software features such as Real Application Clusters, (RAC), hybrid columnar compression, (HCC), storage indexes, (indexes in memory) offloading technology that has logic built into it to move object scans and other intensive workloads to cell nodes from the primary database nodes. There are considerable other features, but understanding that Exadata is an ENGINEERED system, not a hardware solution is important and its both a blessing and a curse for those databases supported by one. The database engineer must understand both Exadata architecture and software along with database administration. There is an added tier of performance knowledge, monitoring and patching that is involved, including knowledge of the Cell CLI, the command line interface for the cell nodes. I could go on for hours on more details, but let’s get down to what is required when I am working on a project to migrate an Exadata to Azure.

Focus on the Right Thing

I discovered that many times, it was natural for many to focus on the engineered system resources and attempt to duplicate this in Azure. Although there are numerous features that are similar to Exadata in Azure Data Warehouse, it’s still not the correct path to focus on the engineered system.

Focus on the Database Workload.

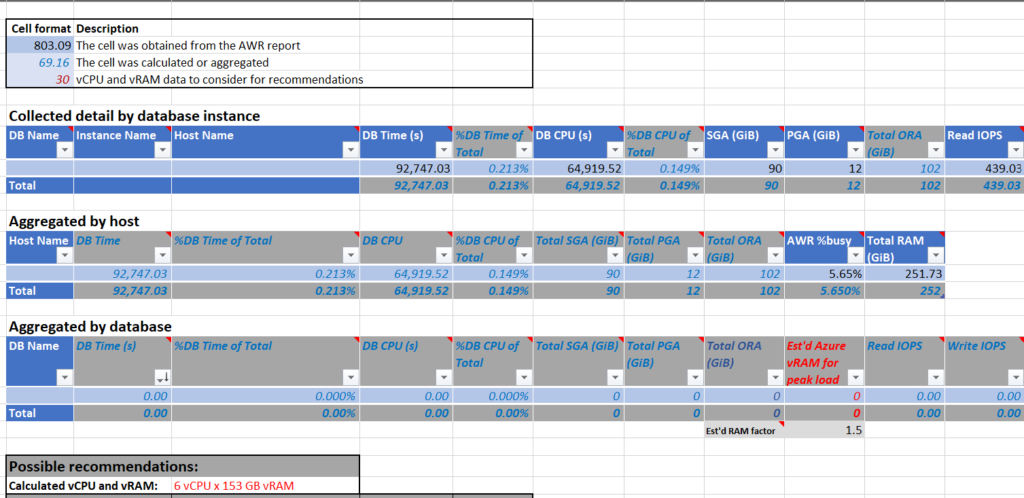

Oracle offers us this in the way of an Automatic Workload Repository, (AWR) report. This data is always being collected, so no extra workload will be requested on the production system. Simply generate the report on the repository and review the information provided on CPU, memory, IO, along with any red flags that should be addressed before migrating to the cloud.

It allows us to break down workloads by databases. For most Exadata investments, there was one or two databases that were the primary reason for the Exadata and the main consumer of resources. By isolating by database, it allows for better sizing, pricing and licensing cost.

With the breakdown by database, we’re also evolving a spreadsheet with the information we need to calculate the Azure resources that would be needed in a lift and shift. This makes it simpler to consume by the customer if they’re interested, too.

Its an Exadata Thing

The next step is to identify what Exadata specific features are in play that won’t come over in a lift/shift-

- Storage indexes that will need to be physically created that currently only exist in memory for smart scans.

- What offloading to cell nodes happens currently and will require faster IO and added resources to support on the IaaS cloud solution?

- What HCC is in place and the added IO pressure on the system once it no longer exists? What compression options are available in Oracle on IaaS for the customer to take advantage of?

- What objects, etc. reside in the different caches? Flash Cache for recently used objects must be listed and tested for latency.

- Was the SGA shrunk to force offloading and does this mean the new databases require more memory than what is currently allocated?

- If using RAC, (expected 98% of time on Exadata) is there a plan to stay RAC and if so, is it justified and if not, ensure to calculate for the savings from global cache no longer needed, but total for the needs of each node’s current workload.

- Flash log– without this temporary store for low-latency redo log writes, latency impacts must be identified and optimized through other means.

All of this data is captured in some form, within the AWR reports and via queries from the Cell CLI. I commonly request a one week snapshot report of AWR from each database on the Exadata. If there are monthly, quarterly or yearly reports, this can be addressed farther into the project, but gaining an idea of the workloads for each of the databases is my first step.

- Enter in all pertinent data into the spreadsheet, including OS, db version, RAC/Non-RAC, memory, CPU/Core, etc.

- Trap the percentage of CPU, memory and IO being used by the workload vs. what is available.

- Review the AWR and perform a database health check, including any recommendations for issues that should be addressed before the database is moved to the cloud.

- Identify any areas that need deeper investigation- odd block sizes, non-default parameter settings, running maintenance/admin jobs that need to be addressed, etc.

I do provide a full healthcheck report of the databases. Its not something they often receive, so to have this review is incredibly beneficial to their long-term satisfaction with their cloud migration. Who wants to migrate over a problem with their database? Resolving it before the cloud migration saves everyone time, money and headaches.

Second phase is to run Exadata specific collections:

- Query the cell cli for offloading percentages

- Pull the flashcache information from the AWR

- Query the amount of flash log that is in use and how often

- Query the objects that are currently using HCC, along with compression ratios, uncompressed sizes, IO percentages for workload

- Identify storage indexes used for smart scans. This includes columns and object name they reside on.

- Inspect the PGA, is there tons of multi-pass or is the PGA sized correctly? Will this be sufficient once off of Exadata where some hash joins may be run on cell nodes?

With this data collected, the next step is unique to the customer database, but most often requires:

- Physical indexes created in the production database, but left invisible and only made visible after the migration to Azure.

- Implement new compression options that are available in the version of Oracle in Azure.

- Resize the SGA and PGA to address any shrinking that was done on the Exadata.

- Pin objects in memory and other cache features for better performance.

- Optimize any SQL that relied on the Exadata offloading to exist previously, where optimization should have been the first route taken.

- Place redo logs on ultra disk if required

- If there is a specific workload that was using high degree of parallelism,(DOP) to run, it may be time to discuss an Azure DW solution to process the data using Azure Data Factory to push the ELT. Using the right tool for the job is always preferable and there are times where the massive parallel processing and ability to offload to a solution that can handle the workload long term without impacting the existing data store is preferential.

Testing Without Features on Exadata

There are numerous ways you can “shut off” Exadata features. If the space exists on the Exadata or a test database(s) exist, the following parameter changes can be used to do this or it can be done as an optimization process as part of the lift and shift.

Stop offloading to cell nodes, disable bloom filters and disable storage indexes:

alter system set cell_offload_processing=false; alter system set _BLOOM_FILTER_ENABLED=false; alter system set _KCFIS_STORAGEIDX_DISABLED=true;

You can then verify offloading to cell nodes is no longer occurring with the following query:

select a.name, b.value/1024/1024 MB from v$sysstat a, v$sysstat b

where a.statistics#=b.statistics# and (a.name in ('physical read total bytes',

'physical write total bytes', 'cell IO uncompressed bytes')

or a.name like 'cell phy%');

The results should no longer show cell activity once this is put into place and you can check offloading and smart scans. SGA can be increased and other features will still be in play, but this can then be a step by step process as we migrate to the cloud. It also gives us the ability to shut off features and test in a controlled situation.

I won’t say migrating off of Exadata is easy, but I will say it’s easily doable. The main goal is to ensure that customers are satisfied in the long run and this is where the steps you see in this post are important. It’s not as much a lift and shift as it is a lift and build to the cloud. This would be a requirement in going to any non-Exadata solution and this one will save the customer money and grant them the scalability they didn’t have with an on-prem engineered solution.

One comment on “Migrating Oracle Exadata Workloads to Azure”

Comments are closed.